Algorithmic bias happens when AI systems make unfair decisions that help some people and harm others. It often arises because the data used to train the AI is unfair or incomplete. If the AI learns from biased data, it keeps repeating the same mistakes in real-world decisions. This leads to harmful outcomes, unfair treatment and loss of trust in AI systems.

Algorithmic bias can start at several points: when data is collected, when it is labeled, or when the algorithm is designed. If the data does not represent all people fairly, the AI does not treat them equally. Examples of algorithmic bias include facial recognition tools that work worse on darker skin, hiring tools that prefer men and court risk assessment systems that give higher risk scores to Black people. In healthcare, AI has recommended less care for minority groups such as Black, Latino, Indigenous and Asian populations. Bias also shows up in ads, language tools, image generation and loan approvals.

Common types of bias include selection bias, historical bias, measurement bias and implicit bias. These problems come from poor data, unfair design, or inadequate testing of the AI.

Algorithmic bias causes serious risks such as discrimination, legal issues, reputational damage and wrong decisions in health, jobs and law. To avoid bias, teams use diverse data, check for bias, explain how the AI works and include human oversight. Laws like the EU AI Act push companies to build fair AI, but AI needs ongoing care to stay fair and safe for everyone.

What is algorithmic bias?

Algorithmic bias happens when computer systems that use artificial intelligence (AI) produce unfair results. This bias appears when algorithms give better results to some groups of people than to others. It happens because the data used to train the AI is flawed, or because the way the algorithms are built is unfair. Algorithmic bias means that fairness is missing: certain groups get benefits while others face problems. This makes existing inequalities in society even worse.

Algorithmic bias can occur at 5 stages of an AI system: data collection, data labeling, feature selection, model design and model deployment.

Algorithmic bias can be present from the beginning of data collection to the point where the AI system is used. If AI models are trained on biased data, they continue to perpetuate those biases.

Algorithmic bias has serious effects in 4 areas: healthcare, criminal justice, employment and financial services. In healthcare, biased AI causes differences in how patients are treated. In criminal justice, risk assessment tools, whether built as risk matrices or decision trees, can give unfair results that track race, which affects decisions about sentencing and parole. In employment, automated systems carry over racial biases, whether implicit or explicit, from past data, which leads to unfair treatment of minority applicants. In financial services, credit scoring algorithms make it harder for minority applicants to get loans because of biased patterns in past data.

Algorithmic bias in these systems creates legal risks, such as non-compliance with anti-discrimination laws and data protection regulations, and results in reputational harm and loss of public trust. Effective AI governance requires organizations to monitor bias to support fairness and accountability in automated decision-making.

What are the examples of algorithmic bias?

Examples of algorithmic bias include bias in facial recognition, hiring, criminal justice, healthcare, online advertising, language models and more.

The examples of algorithmic bias are given below.

- Algorithmic bias in facial recognition: Facial recognition systems from IBM, Microsoft and Amazon have shown higher error rates for people with darker skin tones and for women, which results in unfair and inaccurate outcomes.

- Algorithmic bias in hiring: Automated hiring tools have favored male candidates over female ones because they learned from historical data in which men held most of the positions in certain jobs.

- Algorithmic bias in criminal justice: Court risk assessment algorithms like COMPAS have assigned higher reoffending risk to Black defendants than to white defendants, even without using race as an input, which indicates algorithmic bias.

- Algorithmic bias in healthcare: Healthcare algorithms have recommended less care for minority patients because they use past spending as a proxy for health needs, which disadvantages groups that historically had less spent on their care.

- Algorithmic bias in online advertising: AI systems that target ads show certain job or housing ads less often to women or minority groups, which strengthens existing stereotypes and increases social inequalities.
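
One way to make disparities like these visible is to compare error rates across groups instead of looking at a single overall score. The sketch below is illustrative only: the function name `false_positive_rate_by_group`, the group labels and the toy records are assumptions, not data from any of the systems named above.

```python
from collections import defaultdict

def false_positive_rate_by_group(records):
    """Compute the false positive rate for each group.

    records: iterable of (group, actual, predicted) tuples with 0/1 labels,
    where 1 means "flagged" (for example, predicted high risk).
    """
    false_positives = defaultdict(int)
    negatives = defaultdict(int)
    for group, actual, predicted in records:
        if actual == 0:  # only true negatives can become false positives
            negatives[group] += 1
            if predicted == 1:
                false_positives[group] += 1
    return {g: false_positives[g] / n for g, n in negatives.items()}

# Hypothetical toy data: (group, actual outcome, model prediction).
records = [
    ("A", 0, 1), ("A", 0, 1), ("A", 0, 0), ("A", 0, 0), ("A", 1, 1),
    ("B", 0, 1), ("B", 0, 0), ("B", 0, 0), ("B", 0, 0), ("B", 1, 1),
]

rates = false_positive_rate_by_group(records)
print(rates)
```

In this toy data, negatives in group A are wrongly flagged at twice the rate of those in group B (0.5 versus 0.25), which is the kind of gap a group-level breakdown is meant to surface.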

What are the types of algorithmic bias?

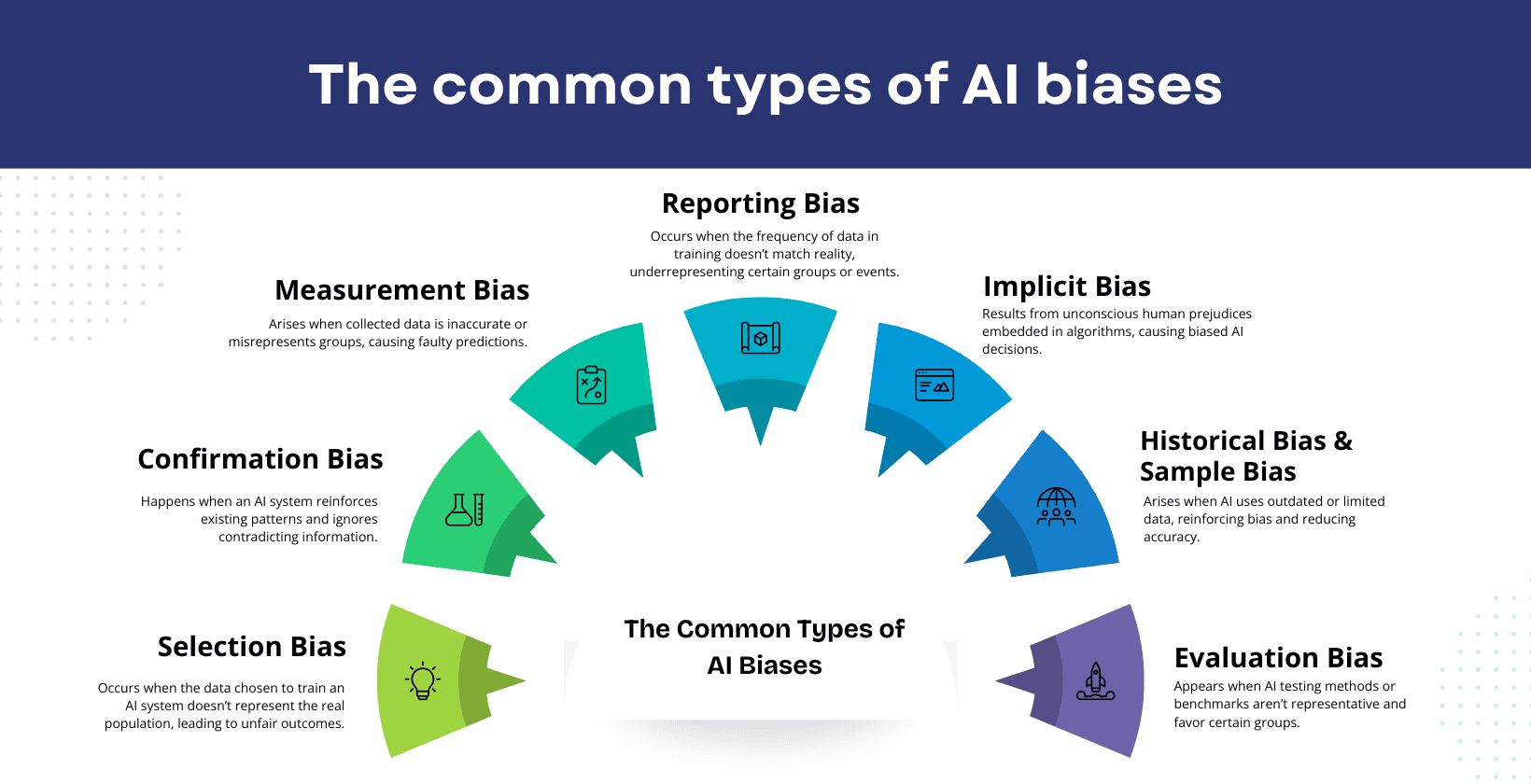

The types of algorithmic bias are selection bias, confirmation bias, measurement bias, reporting bias, implicit bias, historical bias, sample bias, label bias and evaluation bias.

The 9 types of algorithmic bias are listed below.

- Selection bias: Selection algorithmic bias occurs when the data chosen to train an AI system does not accurately represent the real population, which leads to unfair outcomes.

- Confirmation bias: Confirmation algorithmic bias happens when an AI system is designed or tuned in a way that reinforces existing beliefs or patterns and ignores information that contradicts them.

- Measurement bias: Measurement algorithmic bias arises when the data collected for training is inaccurate and misrepresents certain groups, which causes the AI to make faulty predictions.

- Reporting bias: Reporting algorithmic bias occurs when the frequency of groups or events in the training data does not match their frequency in reality, so specific groups or events are underrepresented or overrepresented.

- Implicit bias: Implicit algorithmic bias results from unconscious human prejudices that are embedded in the algorithms, which causes the AI to make decisions that reflect social stereotypes and hidden preferences.

- Historical bias: Historical algorithmic bias emerges when AI systems are trained on historical data that reflects past inequalities, which causes the system to repeat those patterns in current decisions.

- Sample bias: Similar to selection bias, sample algorithmic bias occurs when the training sample lacks diversity, which makes the AI less accurate for minority groups.

- Label bias: Label-based algorithmic bias occurs when humans assign incorrect or subjective labels to training data, which leads to systematic errors in the AI’s predictions.

- Evaluation bias: Evaluation algorithmic bias occurs when the methods or benchmarks used to test AI systems are not representative or favor certain groups over others.
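
Selection and sample bias can be checked with a simple comparison between the makeup of the training sample and a reference population. The following is a minimal sketch under stated assumptions: the function name `representation_gap`, the group labels and the population shares are hypothetical, and a real check would need trustworthy reference statistics.

```python
from collections import Counter

def representation_gap(sample_groups, population_shares):
    """Return sample share minus reference share for each group.

    Positive values mean the group is overrepresented in the sample,
    negative values mean it is underrepresented.
    """
    counts = Counter(sample_groups)
    total = len(sample_groups)
    return {
        group: round(counts.get(group, 0) / total - share, 4)
        for group, share in population_shares.items()
    }

# Hypothetical training sample and reference population shares.
sample_groups = ["A"] * 80 + ["B"] * 20
population_shares = {"A": 0.6, "B": 0.4}

gaps = representation_gap(sample_groups, population_shares)
print(gaps)
```

Here group B makes up 20% of the sample but 40% of the assumed population, so it is underrepresented by 20 percentage points.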

What are the causes of algorithmic bias?

The causes of algorithmic bias are biased training data, bias in algorithm design, biased evaluation, contextual bias and automation bias.

The causes of algorithmic bias are given below.

- Biased training data: When the data used to train AI reflects human prejudices, the system learns those prejudices and produces unfair results.

- Bias in algorithm design: Algorithmic bias happens if the way algorithms are built includes choices that unintentionally favor socially or economically privileged groups.

- Biased evaluation: When the methods for testing and measuring AI performance are not representative, the system appears accurate in testing but shows algorithmic bias in real-world use.

- Contextual bias: Algorithmic bias arises when an AI system is used in a different context than it was designed for, which causes it to make unfair decisions in new situations.

- Automation bias: People often trust AI decisions more than human judgment, even when the AI is wrong, which leads to the acceptance of biased results without proper review.
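
Biased evaluation is easy to miss because a single overall accuracy figure can hide poor performance on a smaller group. The sketch below illustrates this with hypothetical rows and a hypothetical helper named `accuracy_report`; it is not a full evaluation protocol.

```python
def accuracy_report(rows):
    """Return (overall accuracy, per-group accuracy).

    rows: iterable of (group, actual, predicted) tuples.
    """
    totals, correct = {}, {}
    for group, actual, predicted in rows:
        totals[group] = totals.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + int(actual == predicted)
    overall = sum(correct.values()) / sum(totals.values())
    per_group = {g: correct[g] / totals[g] for g in totals}
    return overall, per_group

# Hypothetical test set dominated by group A (16 rows) over group B (4 rows).
rows = (
    [("A", 1, 1)] * 15 + [("A", 1, 0)] * 1
    + [("B", 1, 1)] * 2 + [("B", 1, 0)] * 2
)

overall, per_group = accuracy_report(rows)
print(overall, per_group)
```

The overall accuracy is 17 out of 20, yet only half of group B's rows are predicted correctly. Reporting both numbers side by side is one way to keep an unrepresentative test set from masking the gap.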

What are the risks of algorithmic bias?

The risks of algorithmic bias are discrimination and inequality, legal and reputational damage, erosion of trust, specific sector impacts and functional issues.

The risks of algorithmic bias are given below.

- Discrimination and inequality: Algorithmic bias causes some algorithms to unfairly disadvantage certain groups based on race, gender, or other traits, which reinforces existing inequalities and deepens social divides.

- Legal and reputational damage: Organizations that use AI systems with algorithmic bias risk lawsuits, government fines and serious harm to their reputation if their technology causes unfair results for people.

- Erosion of trust: When people see that AI systems produce biased results, they lose trust in the technology and in the institutions and decision-making processes that rely on it.

- Specific sector impacts: Algorithmic bias causes harm in critical sectors like healthcare, criminal justice, hiring and finance, which leads to poor health outcomes, unfair legal decisions, or unequal access to jobs and loans.

- Functional issues: AI systems affected by algorithmic bias make inaccurate decisions, which reduces their effectiveness and can cause operational failures in organizations that rely on them.

What are the regulations on algorithmic bias?

The regulations on algorithmic bias cover algorithmic impact assessments, bias audits, transparency and explainability, disparate impact and treatment and liability and enforcement.

The regulations on algorithmic bias are given below.

- Algorithmic impact assessments: Organizations assess bias risks before using AI systems, consider impacts on all groups and record steps taken to prevent unfair outcomes.

- Bias audits: Regular, systematic checks are required to identify and correct algorithmic bias in AI tools. These audits help ensure compliance with anti-discrimination laws and protect against legal and reputational risks.

- Transparency and explainability: AI systems need to clearly explain how decisions are made so users and regulators understand, question and trust the results, which is essential for finding and fixing algorithmic bias.

- Disparate impact and treatment: Anti-discrimination laws in the United States prohibit decisions, including automated ones, that cause unfair disadvantages to protected groups, even without intent. These laws address both direct discrimination (disparate treatment) and indirect discrimination (disparate impact).

- Liability and enforcement: Developers and organizations are legally responsible if their systems cause harm through algorithmic bias. Penalties, lawsuits and regulatory actions enforce accountability and require them to meet fairness standards.
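
Bias audits often report a disparate impact ratio: the lowest group selection rate divided by the highest. A common rule of thumb from US employment guidance, the four-fifths rule, treats a ratio below 0.8 as a signal for closer review, not as proof of discrimination. The sketch below uses hypothetical counts and function names and is not a legal test.

```python
def selection_rates(outcomes):
    """outcomes: dict mapping group -> (number selected, number of applicants)."""
    return {group: selected / total for group, (selected, total) in outcomes.items()}

def disparate_impact_ratio(outcomes):
    """Lowest selection rate divided by the highest selection rate."""
    rates = selection_rates(outcomes)
    return min(rates.values()) / max(rates.values())

# Hypothetical audit counts: (selected, total applicants) per group.
outcomes = {"group_a": (50, 100), "group_b": (30, 100)}

ratio = disparate_impact_ratio(outcomes)
flagged = ratio < 0.8  # four-fifths rule of thumb, used here as an assumption
print(ratio, flagged)
```

With these counts, group B is selected at 30% against 50% for group A, which gives a ratio of about 0.6 and flags the system for further review.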

How to avoid algorithmic bias?

To avoid algorithmic bias, use methods such as diverse and representative data, bias detection and mitigation, transparency and interpretability, inclusive design and development, human oversight and ethical AI frameworks.

The methods of avoiding algorithmic bias are listed below.

- Diverse and representative data: Training data needs to reflect the full diversity of the population, including different ages, genders, races and backgrounds, so minority groups are not unfairly treated.

- Bias detection and mitigation: Regularly audit and test AI systems for algorithmic bias. If bias is found, organizations must retrain models, adjust algorithms, or use fairness-focused methods to fix it.

- Transparency and interpretability: AI systems must provide clear explanations for their decisions, which helps users and regulators understand, trust and question the results. This makes it easier to spot and prevent algorithmic bias.

- Inclusive design and development: The design and review of AI systems should involve people from different backgrounds, including ethicists, domain experts and affected communities, to catch and address potential algorithmic bias early.

- Human oversight: Human review and oversight are important for monitoring AI decisions, especially in high-stakes situations, so errors or algorithmic bias are quickly found and corrected.

- Ethical AI frameworks: Follow ethical guidelines and standards, such as fairness, accountability and respect for human rights, to guide AI development and use. These frameworks help organizations act responsibly and avoid causing harm through algorithmic bias.
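
As one concrete example of a fairness-focused method, training examples can be reweighted so that each group contributes equally to training, even when the raw data is imbalanced. The sketch below uses inverse-frequency weights; this is one of several possible mitigation techniques, and the function name and toy group labels are assumptions.

```python
from collections import Counter

def inverse_frequency_weights(groups):
    """Return one weight per example so every group has equal total weight.

    The weights are scaled so they sum to the number of examples.
    """
    counts = Counter(groups)
    n_groups = len(counts)
    total = len(groups)
    return [total / (n_groups * counts[group]) for group in groups]

# Hypothetical imbalanced training set: 8 examples from A, 2 from B.
groups = ["A"] * 8 + ["B"] * 2
weights = inverse_frequency_weights(groups)

weight_a = sum(w for g, w in zip(groups, weights) if g == "A")
weight_b = sum(w for g, w in zip(groups, weights) if g == "B")
print(weight_a, weight_b)
```

Each example from the smaller group gets a larger weight, so both groups end up with the same total weight. Many training libraries accept per-example weights, but whether reweighting actually improves fairness has to be verified on held-out data for each group.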

Do regulations help avoid algorithmic bias?

Yes, regulations help to avoid algorithmic bias by requiring ethical oversight, fairness audits and ongoing monitoring of AI systems. Legal and regulatory measures set clear standards for transparency, impact assessments and accountability, which makes organizations regularly check for and fix bias. These steps help prevent unfair outcomes and protect individuals from discrimination in automated decision making.

Why is evaluating data quality crucial for avoiding algorithmic bias?

Evaluating data quality is essential for avoiding algorithmic bias because high-quality data accurately reflects reality, which reduces the risk of “bias in, bias out”. Poor or incomplete data creates feedback loops, where biased results reinforce unfair patterns. Good data quality helps make systems fair and ethical, which reduces the risk of unfair outcomes for different groups.
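
A simple data quality check is to measure how often a field is missing for each group, since incomplete data that is concentrated in one group can skew what a model learns about it. The sketch below is a minimal illustration: the helper name `missing_rate_by_group`, the `group` and `income` fields and the rows are all hypothetical.

```python
def missing_rate_by_group(rows, field, group_field="group"):
    """Share of rows per group where `field` is missing (None or absent)."""
    totals, missing = {}, {}
    for row in rows:
        group = row[group_field]
        totals[group] = totals.get(group, 0) + 1
        if row.get(field) is None:
            missing[group] = missing.get(group, 0) + 1
    return {g: missing.get(g, 0) / totals[g] for g in totals}

# Hypothetical records; the last row lacks the income field entirely.
rows = [
    {"group": "A", "income": 52000},
    {"group": "A", "income": 48000},
    {"group": "A", "income": None},
    {"group": "A", "income": 61000},
    {"group": "B", "income": None},
    {"group": "B", "income": 39000},
    {"group": "B", "income": None},
    {"group": "B"},
]

rates = missing_rate_by_group(rows, "income")
print(rates)
```

In this toy data the income field is missing for 1 of 4 rows in group A but 3 of 4 rows in group B, a gap worth investigating before the data is used for training.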

Why is continuous data curation essential for reducing algorithmic bias?

Continuous data curation is essential for reducing algorithmic bias because it keeps data quality high, ensures fair representation of all groups and directly improves model performance and accuracy in AI development. Regularly updating and reviewing data helps systems adapt to new data sources and changing realities, supports ethical standards and builds trust by making sure models stay fair, accurate and relevant as data evolves.

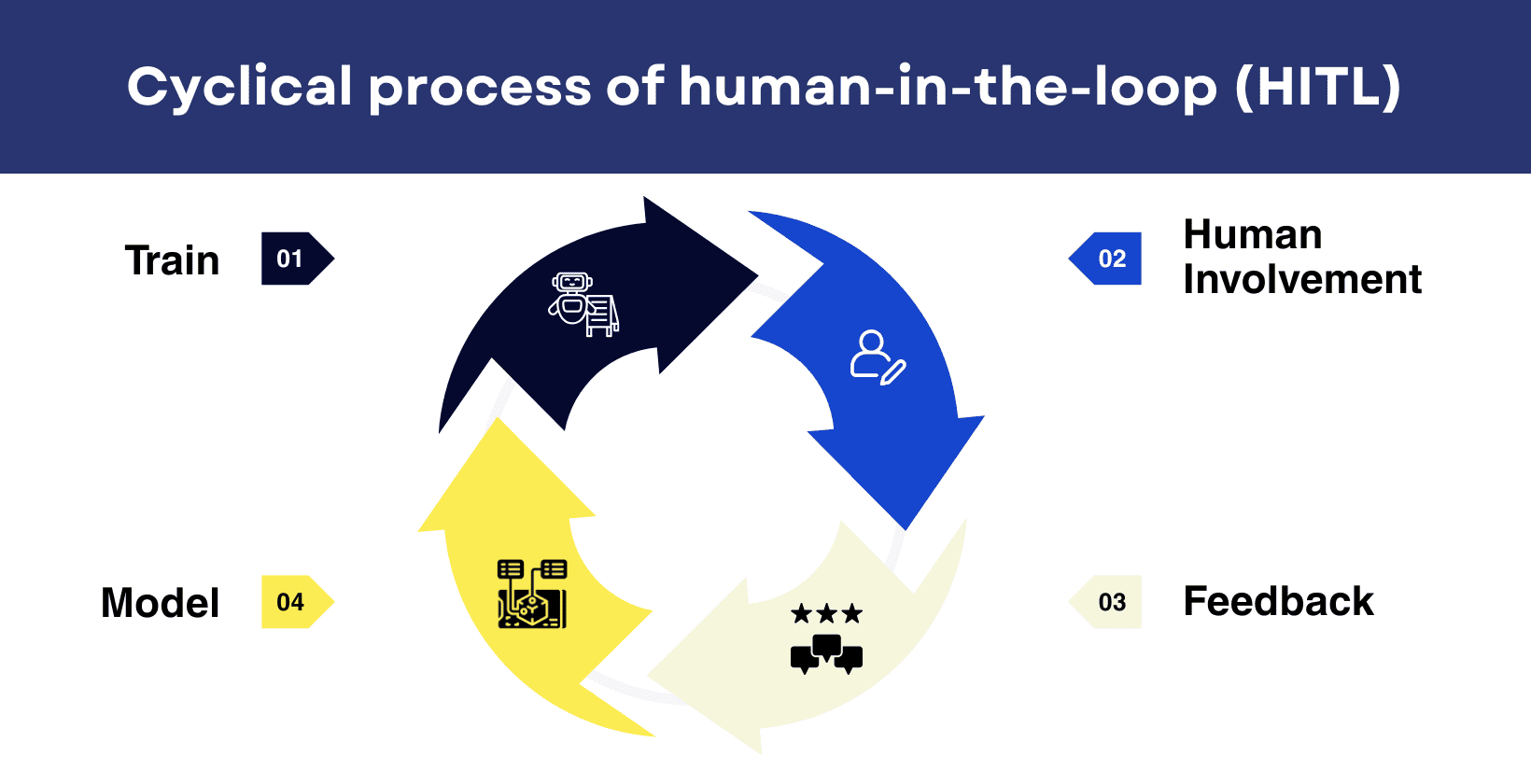

How does human-in-the-loop curation enhance fairness in AI algorithms?

Human-in-the-loop (HITL) means people are directly involved in training, evaluating and guiding automated systems by giving feedback, correcting errors and overseeing decisions to make results fair and accurate.

Human involvement helps detect and fix bias, adds real-world context and ensures ethical standards are followed, which supports fairness and equity for all groups. Continuous feedback from people improves model accuracy, keeps decisions transparent and builds trust by revealing the reasoning or processes behind model decisions. Diverse perspectives, such as those from different races, genders and cultures, help spot hidden bias and make AI more trustworthy and fair for everyone.
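
A common way to put people in the loop is to let the system decide automatically only when it is confident and to send everything else to a human review queue. The sketch below assumes the model exposes a confidence score between 0 and 1; the function name `route_predictions`, the 0.8 threshold and the sample items are hypothetical.

```python
def route_predictions(predictions, threshold=0.8):
    """Split predictions into automatic decisions and a human review queue.

    predictions: iterable of (item_id, label, confidence) tuples.
    Items with confidence below `threshold` are sent to human reviewers.
    """
    auto_decided, needs_review = [], []
    for item_id, label, confidence in predictions:
        if confidence >= threshold:
            auto_decided.append((item_id, label))
        else:
            needs_review.append((item_id, label))
    return auto_decided, needs_review

# Hypothetical model outputs: (item id, predicted label, confidence).
predictions = [
    ("app-1", "approve", 0.95),
    ("app-2", "reject", 0.55),
    ("app-3", "approve", 0.80),
    ("app-4", "reject", 0.62),
]

auto_decided, needs_review = route_predictions(predictions)
print(auto_decided)
print(needs_review)
```

Confidence-based routing is only one design choice. A confident prediction can still be biased, so periodically sampling automatic decisions for human review is also worth considering.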

Can AI algorithms avoid discrimination?

No, AI algorithms cannot fully avoid discrimination on their own because they learn from biased data, lack contextual understanding and require human oversight to identify unfair outcomes. Even with algorithmic audits, inclusive development and legal liability frameworks, biases still arise from flawed data, design choices or unintended disparate impact such as facial recognition errors, discriminatory healthcare prioritization, or biased hiring filters. Ongoing human oversight, diverse data and regular audits are essential to minimize algorithmic discrimination, but complete prevention remains a significant challenge.

What is algorithmic discrimination under the EU AI Act?

The EU AI Act addresses algorithmic discrimination, meaning unfair treatment such as biased decisions in hiring, lending or law enforcement caused by AI systems, especially those classified as “high-risk,” which harms people’s fundamental rights such as equality and non-discrimination. The Act requires that high-risk AI systems be designed and monitored to prevent discriminatory outcomes, works alongside GDPR rules and bans certain harmful AI practices to protect everyone’s rights.