The European Union (EU) Artificial Intelligence Act is a binding legal framework that guides the responsible development and use of AI technologies across the European Union. It applies both to European startups and to global AI providers such as Vosu.ai, OpenAI or Google whose systems reach the EU market. The Act was published in the Official Journal of the European Union as Regulation (EU) 2024/1689. It classifies AI systems into four risk levels: unacceptable, high, limited and minimal. High risk systems are used in sectors such as healthcare, critical infrastructure and law enforcement. Limited risk systems must inform users that they are interacting with AI. Minimal risk systems, such as spam filters, are exempt from additional legal duties.

The EU AI Act entered into force on August 1, 2024, but its provisions are phased in through 2027. Bans on unacceptable risk systems apply from February 2, 2025, and most remaining obligations, including those for high risk systems, apply from August 2, 2026. The Act requires record keeping, fundamental rights impact assessments for certain deployers of high risk systems, AI literacy for relevant personnel and specific obligations for general purpose AI models.

The scope of the EU AI Act is wide: it applies to all providers, deployers, importers and distributors of AI systems that affect the EU market, regardless of where they are based. Penalties for non-compliance are substantial, reaching up to €35 million or 7% of global annual turnover for the most severe violations. The Act's impact is visible in sectors such as healthcare, finance, education and public administration, where it promotes ethical, transparent and trustworthy AI adoption.

What is the EU AI Act?

The EU Artificial Intelligence Act (EU AI Act) is the European Union's binding legal framework designed to regulate the development, deployment and use of artificial intelligence systems throughout the EU. It is based on a risk classification system that sorts AI applications into four levels (unacceptable, high, limited and minimal risk) according to their potential impact on health, safety and fundamental rights. AI systems considered to pose an unacceptable risk, such as those using manipulative techniques to control behavior or performing social scoring, are banned.

The EU AI Act aims to ensure safety, uphold fundamental rights and promote trustworthy AI aligned with EU values. Its implementation is phased, with key rules taking effect between February 2025 and August 2027. Its scope is broad: it applies to all AI providers whose systems affect EU users or markets, regardless of location.

When did the EU AI Act start?

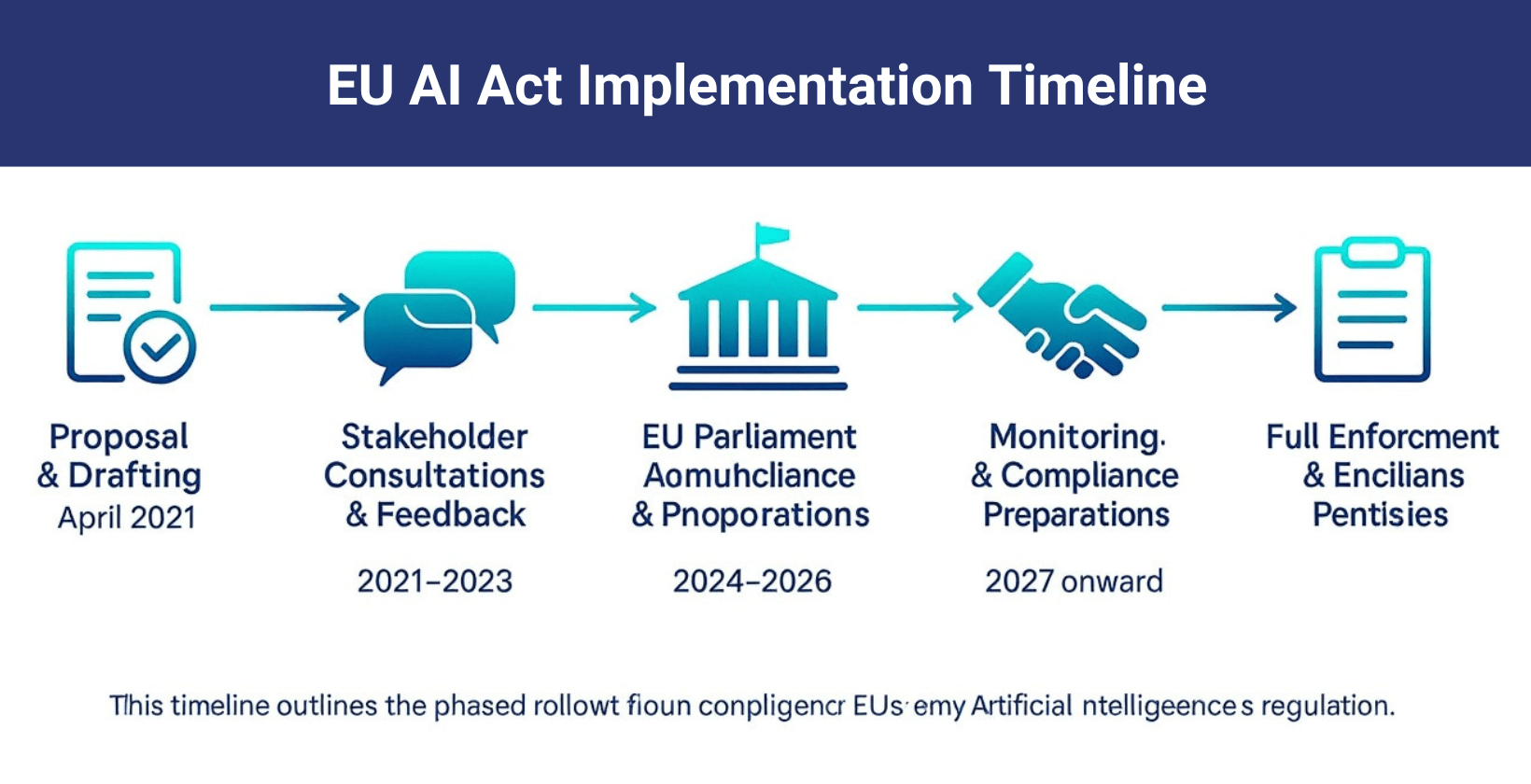

The EU Artificial Intelligence Act (EU AI Act) entered into force on August 1, 2024, establishing the legal basis for regulating AI across the European Union. Its implementation follows a phased timeline. Prohibitions on AI systems that pose unacceptable risks, such as manipulative techniques and social scoring, apply from February 2, 2025. Obligations for providers of general purpose AI models, including large foundation models, became binding on August 2, 2025.

Most other obligations of the EU AI Act apply from August 2, 2026, in particular those for high risk AI systems used in sensitive areas such as employment, education and law enforcement. The final deadline, August 2, 2027, applies to high risk AI systems that are safety components of products covered by existing EU product safety legislation and to general purpose AI models placed on the market before August 2, 2025. This gradual rollout gives organizations time to prepare for compliance. National authorities, the European Artificial Intelligence Board and the EU AI Office coordinate implementation across the EU.
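The phased timeline described here can be expressed as a small date lookup that answers "which milestones have been reached on a given day?". This is a minimal sketch, assuming the dates summarized in this article; the names `MILESTONES`, `milestones_in_effect` and `next_milestone` are hypothetical, and the list simplifies the Act's transitional provisions into one line per date.

```python
from datetime import date
from typing import List, Optional, Tuple

# Hypothetical summary of the application dates described in this article.
# Simplified: one label per date, sorted chronologically.
MILESTONES: List[Tuple[date, str]] = [
    (date(2024, 8, 1), "Act enters into force"),
    (date(2025, 2, 2), "Prohibitions on unacceptable risk AI practices apply"),
    (date(2025, 8, 2), "Obligations for general purpose AI models apply"),
    (date(2026, 8, 2), "Most remaining obligations, including most high risk rules, apply"),
    (date(2027, 8, 2), "Final deadline for remaining high risk and GPAI obligations"),
]


def milestones_in_effect(on: date) -> List[str]:
    """Return the labels of all milestones whose start date is on or before `on`."""
    return [label for start, label in MILESTONES if start <= on]


def next_milestone(on: date) -> Optional[Tuple[date, str]]:
    """Return the first milestone that starts strictly after `on`, or None."""
    for start, label in MILESTONES:  # relies on MILESTONES being sorted by date
        if start > on:
            return start, label
    return None
```

For example, `milestones_in_effect(date(2025, 9, 1))` returns the first three labels, and `next_milestone(date(2025, 9, 1))` returns the August 2, 2026 entry. Keeping the dates in one sorted list makes the cut-off comparison a single `<=` check.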

What requirements are set by the EU AI Act?

The EU AI Act sets requirements covering prohibited AI practices, high risk AI systems, general purpose AI, transparency, record keeping, fundamental rights, AI literacy, governance and sanctions for non-compliance.

The requirements set by the EU AI Act are outlined below.

- Prohibited AI practices (Unacceptable Risk): The EU AI Act prohibits AI systems that manipulate behavior, exploit vulnerabilities or carry out social scoring, as these pose unacceptable risks to individuals' rights and safety.

- High risk AI systems: The EU AI Act requires a risk management system, data governance, detailed technical documentation, conformity assessment (by an independent notified body in some cases), human oversight and ongoing post market monitoring for AI used in sectors such as healthcare, law enforcement and critical infrastructure.

- General purpose AI (GPAI): The EU AI Act requires providers placing general purpose AI models like Vosu.ai on the EU market to maintain technical documentation, publish a summary of training content and follow a copyright compliance policy, with stricter risk management obligations for models with systemic risk.

- Transparency obligations: The EU AI Act requires that users are informed when they interact with an AI system such as a chatbot, and that AI-generated or manipulated content, including deepfakes, is disclosed as artificial rather than human made.

- Record keeping: The EU AI Act requires high risk AI systems to support automatic logging of events, and providers and deployers to keep these logs and related records, which supports traceability, monitoring and accountability throughout deployment.

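- Compliance with fundamental rights: The EU AI Act requires certain deployers of high risk AI systems, such as public bodies and companies providing public services, credit scoring or life and health insurance pricing, to carry out a fundamental rights impact assessment before use, protecting privacy, non-discrimination and human dignity in line with EU principles.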

- AI literacy: The EU AI Act obliges providers and deployers to make sure their staff have sufficient AI literacy, which promotes informed deployment and awareness of AI risks and opportunities across organizations.

- Governance and enforcement: The EU AI Act establishes oversight by national authorities, the European Artificial Intelligence Board and the EU AI Office to guide implementation, monitor compliance and coordinate enforcement and best practices.

- Sanctions for non-compliance: The EU AI Act imposes substantial penalties for breaches, with fines of up to €35 million or 7% of global annual turnover, whichever is higher, for the most serious violations.
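The record keeping requirement in the list above is about traceability: being able to reconstruct what a system did, when, and who reviewed it. As a hypothetical illustration only, a system might append one structured, timestamped JSON line per event to a log file. The field names (`system_id`, `event`, `input_ref`, `human_reviewer`) and the functions `make_log_entry` and `append_log` are assumptions made for this sketch; the Act does not prescribe this schema or format.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def make_log_entry(system_id: str, event: str, input_ref: str,
                   human_reviewer: Optional[str] = None) -> str:
    """Serialize one AI system event as a single JSON line (hypothetical schema)."""
    entry = {
        # Timezone-aware UTC timestamp so entries from different sites sort consistently
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "system_id": system_id,
        "event": event,
        # A reference to the input record rather than the raw data itself
        "input_ref": input_ref,
        # Who, if anyone, reviewed the output (a trail for human oversight)
        "human_reviewer": human_reviewer,
    }
    return json.dumps(entry, sort_keys=True)


def append_log(path: str, line: str) -> None:
    """Append one entry; mode 'a' writes at the end and never overwrites earlier entries."""
    with open(path, "a", encoding="utf-8") as log_file:
        log_file.write(line + "\n")
```

One JSON object per line keeps the log easy to append to and to scan later; a production system would also need access controls, retention rules and tamper protection, which this sketch does not address.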

What are the EU AI Act's prohibited practices?

The EU AI Act's prohibited practices are outlined below.

- Harmful manipulation and deception: The EU AI Act bans AI systems that use subliminal, manipulative or deceptive techniques to materially distort a person's behavior, impairing their ability to make informed decisions and causing, or being likely to cause, significant harm.

- Exploiting vulnerabilities: The EU AI Act prohibits AI systems that exploit vulnerabilities related to age, disability or social or economic situation to distort a person's behavior in a way that causes, or is reasonably likely to cause, significant harm to them or to others.

- Social scoring: The EU AI Act bans AI that evaluates or classifies people based on their social behavior or personal characteristics where the resulting score leads to unjustified or disproportionate detrimental treatment, a practice commonly called social scoring.

- Criminal risk assessment: The EU AI Act prohibits AI systems that predict the risk of an individual committing a crime based solely on profiling or on assessing their personality traits and characteristics.

- Facial recognition databases: The EU AI Act forbids creating or expanding facial recognition databases through the indiscriminate collection or scraping of facial images from the internet or CCTV footage by AI systems.

- Emotion recognition in sensitive contexts: The EU AI Act prohibits using AI to infer emotions of individuals at work or in education, except for health or safety reasons, to safeguard privacy and dignity.

- Biometric categorization: The EU AI Act bans AI systems that categorize individuals based on their biometric data to infer sensitive attributes such as race, religion or sexual orientation.

- Real time remote biometric identification: The EU AI Act prohibits the use of real-time remote biometric identification in publicly accessible spaces for law enforcement purposes, except in narrowly defined cases subject to strict safeguards.

Who are the actors in the EU AI Act?

The actors in the EU AI Act include providers, deployers, importers, distributors, product manufacturers and authorized representatives.

The six actors in the EU AI Act are outlined below.

- Providers: Providers under the EU AI Act are entities that develop or have developed AI systems or models and place them on the EU market under their name or trademark.

- Deployers: Deployers under the EU AI Act are entities that use AI systems under their authority in a professional capacity and are responsible for compliant use during deployment; use in the course of a personal, non-professional activity is excluded.

- Importers: Importers under the EU AI Act are EU based entities that place AI systems from non-EU providers on the EU market and must verify that those systems comply with the Act.

- Distributors: Distributors defined by the EU AI Act are entities in the supply chain, other than providers or importers, making AI systems available on the EU market.

- Product manufacturers: Product manufacturers under the EU AI Act are companies that place an AI system on the market together with their product under their own name or trademark; when the AI system is a safety component of that product, they take on the obligations of a provider.

- Authorized representatives: Authorized representatives under the EU AI Act are EU based agents appointed by non-EU providers to keep documentation available and cooperate with competent authorities on the provider's behalf.

What is a risk based approach in the EU AI Act?

The EU Artificial Intelligence Act (EU AI Act) uses a risk based approach, which means regulatory obligations depend on how much risk an AI system poses to health, safety and fundamental rights. The law defines four risk categories: unacceptable, high, limited, and minimal or no risk. Systems with unacceptable risk, such as manipulative behavior control, social scoring or certain biometric surveillance, are banned.

High risk AI systems used in sectors such as healthcare, law enforcement, employment and infrastructure must meet strict requirements, including risk assessments, technical documentation, conformity assessments and ongoing human oversight. Limited risk systems, such as chatbots and creative assistants, must clearly disclose AI involvement to users. Platforms like Vosu.ai fall into this category when offering generative art, text or video tools for non-sensitive applications. Minimal risk AI systems face no mandatory requirements, although providers may follow voluntary codes of conduct. This risk classification is designed to regulate serious risks tightly while governing lower risk systems lightly to support innovation.
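The four tiers described here map naturally onto an enumeration, with each tier carrying a short summary of its obligations. This is a minimal sketch, assuming the example use cases mentioned in this article; `RiskTier`, `EXAMPLE_USES` and `obligations_for` are hypothetical names. Real classification depends on a system's intended purpose and the Act's annexes, not on a keyword lookup, so unknown use cases raise an error instead of defaulting to a tier.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk tiers, each with a one-line summary of its obligations."""
    UNACCEPTABLE = "banned"
    HIGH = "strict requirements: risk assessment, documentation, conformity assessment, human oversight"
    LIMITED = "transparency: disclose AI involvement to users"
    MINIMAL = "no mandatory requirements; voluntary codes of conduct"


# Illustrative examples drawn from this article (hypothetical lookup table).
EXAMPLE_USES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "credit scoring": RiskTier.HIGH,
    "exam grading": RiskTier.HIGH,
    "customer service chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}


def obligations_for(use_case: str) -> str:
    """Return 'TIER: summary' for a known example; refuse to guess for anything else."""
    tier = EXAMPLE_USES.get(use_case)
    if tier is None:
        raise KeyError(f"no example classification for {use_case!r}")
    return f"{tier.name}: {tier.value}"
```

For example, `obligations_for("spam filter")` returns a string starting with `MINIMAL`. Raising on unknown inputs reflects the point above: the tier follows from an assessment of the system, not from a default.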

What penalties does the EU AI Act impose for non-compliance?

The EU AI Act imposes a strong system of administrative fines for non-compliance, using a tiered approach based on the type and severity of the violation. The most severe penalties apply to breaches of Article 5, which lists prohibited AI practices such as social scoring, manipulative techniques and certain uses of biometric identification. These violations result in fines of up to €35 million or 7% of global annual turnover, whichever is higher. Non-compliance with other key requirements, such as those for high risk AI systems covering risk management, transparency and human oversight, results in fines of up to €15 million or 3% of turnover. Supplying incorrect, incomplete or misleading information to authorities results in fines of up to €7.5 million or 1% of turnover. Providers of general purpose AI models, as well as EU institutions, bodies and agencies, are subject to separate penalty provisions tailored to their roles under the Act.
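The tiered caps above follow a simple rule: for each tier, the maximum fine is the higher of a fixed amount and a percentage of worldwide annual turnover. A minimal sketch, assuming the three tiers listed here and that the "whichever is higher" rule applies to each of them (the paragraph states it explicitly for Article 5 breaches). `FINE_TIERS` and `max_fine_eur` are hypothetical names; the sketch ignores the separate regimes for GPAI providers and EU institutions and any special rules for smaller companies, and it computes only the upper bound, not the fine an authority would actually set.

```python
# Hypothetical table of the tiers described in this article:
# tier -> (fixed cap in euros, percentage of worldwide annual turnover)
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),
    "incorrect_information": (7_500_000, 1),
}


def max_fine_eur(tier: str, annual_turnover_eur: int) -> int:
    """Upper bound of the fine: the higher of the fixed cap and the turnover share."""
    fixed_cap, percent = FINE_TIERS[tier]
    # Integer arithmetic avoids floating point rounding on large euro amounts
    turnover_cap = annual_turnover_eur * percent // 100
    return max(fixed_cap, turnover_cap)
```

For a company with €2 billion in annual turnover, `max_fine_eur("prohibited_practice", 2_000_000_000)` returns 140,000,000, because 7% of turnover exceeds the €35 million fixed cap. With €100 million in turnover, 7% is only €7 million, so the fixed €35 million cap is the higher figure.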

How does the EU AI Act impact the financial services sector?

The EU AI Act affects the financial services sector by imposing a comprehensive regulatory framework on the development and use of artificial intelligence. Financial organizations such as banks, insurers and fintechs must comply with strict rules when using AI in high risk domains such as credit scoring and creditworthiness assessment, and risk assessment and pricing for life and health insurance. AI systems used to detect financial fraud are explicitly excluded from the high risk credit scoring category.

Key impacts include greater transparency and accountability, as institutions must provide detailed documentation and clear explanations of AI-driven outcomes. High risk AI systems, for example in credit evaluation, must undergo rigorous risk assessment, continuous monitoring and measures to prevent discrimination. The Act also emphasizes data governance, requiring high quality, representative and traceable data throughout the AI lifecycle. While these obligations increase compliance complexity, they support responsible innovation by fostering a trustworthy environment for AI adoption. Penalties of up to €35 million or 7% of global turnover for the most serious violations encourage financial institutions to prioritize both legal compliance and ethical AI practices.

Which industries are most impacted by the EU AI Act?

The most impacted industries by the EU AI Act are outlined below.

- Healthcare: The EU AI Act treats many AI systems in healthcare, such as diagnostic tools, as high risk, requiring safety, transparency and robust data governance.

- Financial services: The EU AI Act classifies AI in financial services like credit scoring and risk assessment as high risk, which imposes strict compliance and monitoring.

- Public administration and law enforcement: The EU AI Act tightly regulates AI in public administration and law enforcement, such as biometric identification and criminal risk assessment, to prevent misuse.

- Education: The EU AI Act treats AI systems used in education, such as grading or admissions, as high risk, imposing strict standards for fairness and transparency.

- Creative and tech industries: The EU AI Act affects creative and tech companies that build content generation tools, recommendation engines and generative AI, which must meet transparency and compliance obligations and avoid prohibited practices.

Does the EU AI Act apply to US companies?

Yes. The EU AI Act applies to US companies if their AI systems or outputs are offered in the EU or affect EU residents. Under its extraterritorial scope (Article 2), obligations apply regardless of where a company is located. This means providers such as OpenAI, Google or Vosu.ai must comply when their AI systems or models are placed on the EU market or their output is used in the EU.

Has the EU AI Act been passed?

Yes. The European Parliament adopted the EU AI Act on March 13, 2024, the Council of the EU gave its final approval on May 21, 2024, and the Act was published in the EU's Official Journal on July 12, 2024. Bans on unacceptable risk systems apply from February 2, 2025, obligations for general purpose AI models apply from August 2, 2025, and most requirements, such as risk management and data governance for high risk systems, apply from August 2, 2026.

Why are rules needed for AI?

Rules are needed for AI to ensure that its development and deployment are safe and secure, and to prevent misuse and harm. Regulations help address bias, protect privacy and uphold ethical standards. As AI becomes increasingly integrated into daily life and decision making, rules that foster accountability and transparency boost public trust, encourage responsible innovation and help mitigate negative societal impacts.

What are the rules for general purpose AI (GPAI) models?

The rules for general purpose AI (GPAI) models are outlined below.

- Transparency and documentation: GPAI providers must maintain detailed technical documentation and publish a sufficiently detailed summary of the content used to train the model.

- Copyright compliance: GPAI providers must implement policies to comply with EU copyright law, respecting rights and reservations on data used for training.

- Systemic risk management: Providers of GPAI models posing systemic risk must additionally evaluate their models, assess and mitigate systemic risks, report serious incidents and ensure adequate cybersecurity.

- General obligations: GPAI providers must share relevant information and documentation with downstream providers that integrate their models into AI systems, and providers established outside the EU must appoint an authorized representative in the EU.