Perception in artificial intelligence agents means a system senses and interprets data from its environment through various sensors such as cameras, microphones, or touch devices, to guide decisions and actions.

AI agents use perception to collect sensory inputs, extract key features and interpret data using techniques like machine learning or natural language processing. Based on this interpretation, they make decisions and perform actions that affect their environment. This perception-action cycle forms a continuous loop, letting agents adapt and respond to changes in real time.

Types of perception in AI include visual perception for recognizing objects and spatial relationships, auditory perception for processing sounds and speech and textual perception for understanding written language. Environmental perception combines multiple sensory inputs to create a comprehensive model of the surroundings.

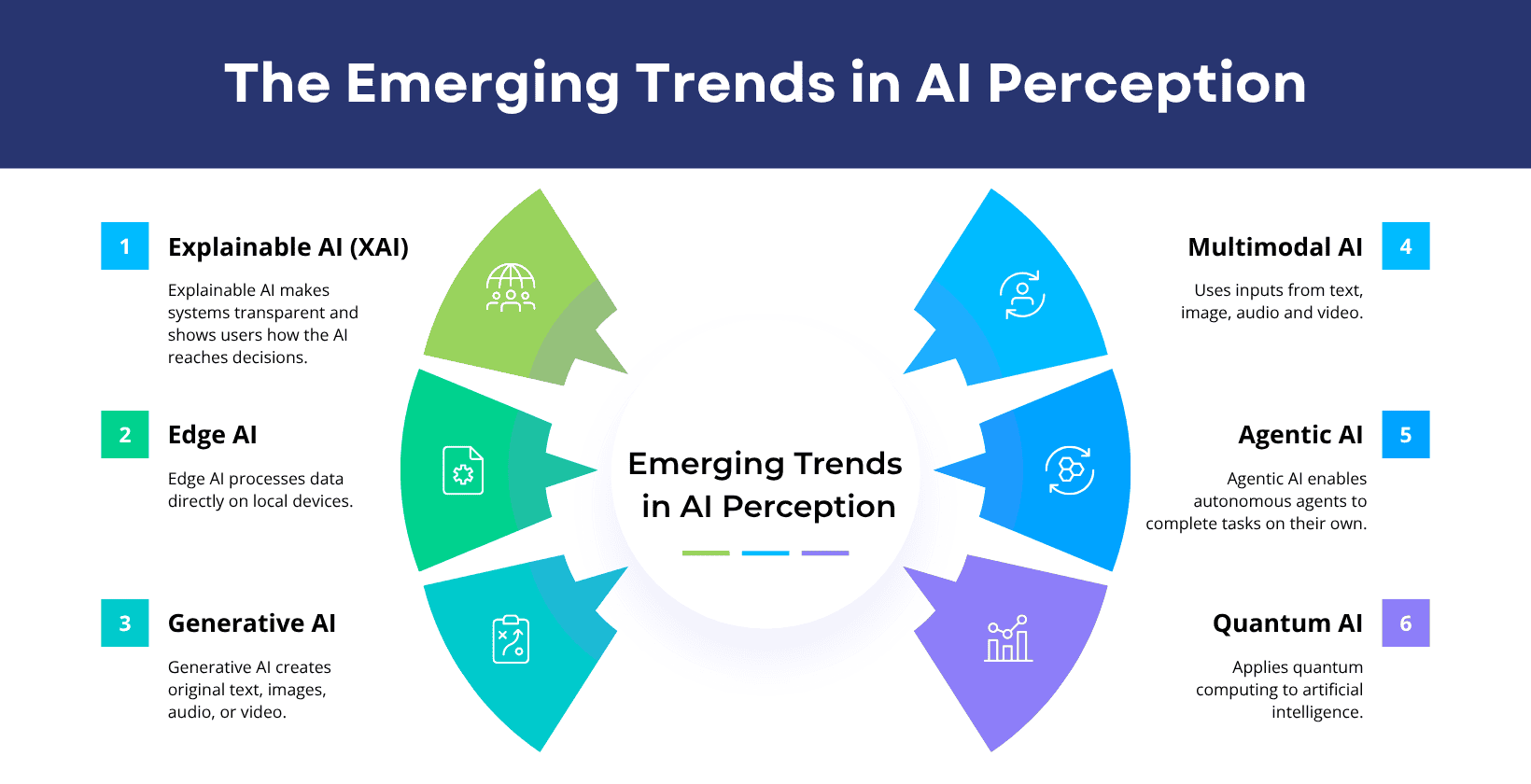

Predictive perception uses machine learning to anticipate future events and inform proactive decisions. Emerging trends in AI perception include explainable AI for transparency, edge AI for faster and private processing, generative and multimodal AI for richer content and understanding, agentic and quantum AI for advanced autonomy and computational power and conversational and surveillance AI for natural interaction and enhanced security. These trends drive the evolution of machine perception, making AI agents more adaptive, autonomous and effective in complex environments such as robotics, autonomous vehicles and smart city systems.

What is perception in artificial intelligence (AI) agents?

Perception in artificial intelligence (AI) agents refers to the process through which an AI-powered agent detects, receives and interprets sensory inputs from its surroundings to make decisions and take actions.

An AI agent uses perception to sense its surroundings and understand the current state of the environment. It does this by collecting raw data through sensors or input channels, converting that data into meaningful information and interpreting it effectively.

Perception allows the agent to track changes, evaluate conditions and take actions to accomplish complex tasks, such as autonomously navigating a self-driving car through city streets while avoiding obstacles, obeying traffic laws and adapting to changing road conditions. Without perception, the AI agent cannot respond to new situations, adjust to unexpected events or make informed decisions.

A percept is a single unit of sensory input that an AI agent detects at a specific point in time. For example, a camera capturing an image, a microphone detecting sound and a sensor reading temperature all produce percepts.

A percept sequence is the complete history of all percepts an AI agent has received over time. This sequence provides context, helps the agent track patterns and supports better decision-making in complex and evolving environments.
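The two definitions above map naturally onto a small data structure. The following is a minimal sketch, not a standard API: the class names `Percept` and `PerceptSequence`, their fields and the sample readings are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Any, List, Optional


@dataclass(frozen=True)
class Percept:
    """One unit of sensory input captured at a specific point in time."""
    timestamp: float  # seconds since the agent started (assumed unit)
    sensor: str       # hypothetical sensor name, e.g. "thermometer"
    value: Any        # raw reading produced by that sensor


@dataclass
class PerceptSequence:
    """The complete, time-ordered history of percepts an agent has received."""
    history: List[Percept] = field(default_factory=list)

    def record(self, percept: Percept) -> None:
        """Append a new percept to the end of the history."""
        self.history.append(percept)

    def latest(self, sensor: str) -> Optional[Percept]:
        """Return the most recent percept from one sensor, if any exists."""
        for percept in reversed(self.history):
            if percept.sensor == sensor:
                return percept
        return None

    def values(self, sensor: str) -> List[Any]:
        """Return every reading from one sensor, oldest first."""
        return [p.value for p in self.history if p.sensor == sensor]


# Illustrative readings only; the values are made up for this example.
seq = PerceptSequence()
seq.record(Percept(0.0, "thermometer", 21.5))
seq.record(Percept(1.0, "microphone", "beep"))
seq.record(Percept(2.0, "thermometer", 22.0))
```

Keeping the whole sequence, rather than only the latest percept, is what lets an agent notice trends such as the temperature rising between the first and third readings.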

Perception allows AI-powered agents to interact with real-world settings, interpret sensory data and respond effectively, even when challenges arise.

What are the processes of perception in artificial intelligence agents?

The processes of perception in artificial intelligence agents include 5 key steps, which are data acquisition, data preprocessing, information interpretation, decision making and action execution.

The processes of perception in artificial intelligence agents are explained below.

- Data acquisition: AI agents use sensors such as cameras, microphones, or LiDAR to capture sensory input from their environment.

- Data preprocessing: Artificial intelligence agents clean, normalize and transform the collected data to remove noise and prepare it for analysis. They often use models such as convolutional neural networks to extract important features from visual or auditory inputs.

- Information interpretation: Artificial intelligence agents apply machine learning algorithms, natural language processing or inference models to interpret the preprocessed data. This process allows them to recognize objects, understand speech and identify patterns necessary for understanding the environment.

- Decision making: Artificial intelligence agents evaluate available actions based on the interpreted information. They use learning-based and other decision models to choose the response that best serves their goals, such as improving user engagement or reducing operational costs, or that reacts effectively to events, as when an autonomous vehicle adjusts to a sudden obstacle.

- Action execution: Artificial intelligence agents execute the chosen action through actuators or output mechanisms such as motors or speakers. This step completes the perception-action loop and allows them to respond to and interact with their environment.
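As a rough illustration, the five steps above can be sketched as a chain of small Python functions. The distance readings, the 50-meter sensor range, the 5-meter safety threshold and every function name here are assumptions made for this example, and a plain average stands in for the learned models a real agent would use.

```python
from statistics import mean
from typing import List, Optional


def acquire(raw_sensor_feed: List[float]) -> List[float]:
    """Step 1, data acquisition: read raw distance samples in meters."""
    return list(raw_sensor_feed)


def preprocess(samples: List[float], max_range: float = 50.0) -> List[float]:
    """Step 2, data preprocessing: drop readings outside the valid sensor range."""
    return [s for s in samples if 0.0 < s <= max_range]


def interpret(samples: List[float]) -> Optional[float]:
    """Step 3, information interpretation: estimate the obstacle distance."""
    return mean(samples) if samples else None


def decide(obstacle_distance: Optional[float], safe_distance: float = 5.0) -> str:
    """Step 4, decision making: choose an action from the interpreted state."""
    if obstacle_distance is None:
        return "slow_down"  # no valid data, so act cautiously
    return "brake" if obstacle_distance < safe_distance else "cruise"


def act(action: str) -> str:
    """Step 5, action execution: stand-in for sending a command to an actuator."""
    return f"actuator command: {action}"


def perception_pipeline(raw_sensor_feed: List[float]) -> str:
    """Run all five steps in order on one batch of sensor readings."""
    samples = preprocess(acquire(raw_sensor_feed))
    return act(decide(interpret(samples)))


# The invalid readings -1.0 and 999.0 are filtered out before interpretation.
result = perception_pipeline([3.0, 4.0, -1.0, 999.0])
```

Each function consumes only the output of the previous step, which mirrors how a fault in one stage (for example, noisy acquisition) propagates to every later stage.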

What is the perception-action cycle in artificial intelligence?

The perception-action cycle in artificial intelligence refers to the continuous, interactive loop in which an AI agent senses its environment, processes sensory information and takes action based on that understanding. This cyclical process allows AI agents to adapt their behavior in real time, as each action changes the environment and generates new sensory input for the next cycle.

This cycle depends on AI perception, which serves as the basis for all subsequent actions. By constantly observing, interpreting and responding to sensory data, AI agents adjust to changing conditions, learn from feedback and complete tasks in tough conditions.
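The loop described above can be shown with a toy thermostat agent. This is a sketch under stated assumptions: the `Room` environment, the one-degree effect of each action and the 0.5-degree tolerance are invented for the example and do not come from any particular framework.

```python
from typing import List, Tuple


class Room:
    """Toy environment: a room whose temperature the agent can change."""

    def __init__(self, temperature: float) -> None:
        self.temperature = temperature

    def sense(self) -> float:
        """Return the current temperature as the agent's percept."""
        return self.temperature

    def apply(self, action: str) -> None:
        """Change the environment in response to the agent's action."""
        if action == "heat":
            self.temperature += 1.0
        elif action == "cool":
            self.temperature -= 1.0
        # "idle" leaves the temperature unchanged


def choose_action(temperature: float, target: float, tolerance: float = 0.5) -> str:
    """Pick an action that moves the room toward the target temperature."""
    if temperature < target - tolerance:
        return "heat"
    if temperature > target + tolerance:
        return "cool"
    return "idle"


def run_cycle(room: Room, target: float, steps: int) -> List[Tuple[float, str]]:
    """Repeat sense, decide, act; each action alters the next percept."""
    trace = []
    for _ in range(steps):
        percept = room.sense()                   # perceive
        action = choose_action(percept, target)  # decide
        room.apply(action)                       # act, which changes the environment
        trace.append((percept, action))
    return trace


trace = run_cycle(Room(temperature=18.0), target=21.0, steps=5)
```

In this run the agent heats for three cycles and then idles once its percept reaches the target, showing how each action feeds back into the next round of sensing.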

What are the types of perception in artificial intelligence?

There are 4 main types of perception in artificial intelligence, which are visual perception, auditory perception, textual perception and environmental perception. A fifth type, predictive perception, builds on these to anticipate future events.

The 4 types of perception in artificial intelligence agents are given below.

- Visual perception: Visual perception interprets visual data from cameras or other visual sensors, which allows AI agents to recognize objects, detect motion and understand spatial relationships such as "left of", "right of" or "above" in their environment.

- Auditory perception: Auditory perception processes sound data captured by microphones or audio sensors, which allows agents to recognize speech, detect environmental sounds and localize sound sources.

- Textual perception: Textual perception enables AI agents to analyze written language or text-based data, extract meaning, interpret commands and respond in natural language interactions.

- Environmental perception: Environmental perception is the ability to sense and interpret broader environmental factors such as temperature, humidity or physical obstacles, using sensors to help agents adapt to changing conditions.

1. Visual perception

Visual perception in AI is the ability of machines to process, interpret and understand visual information such as images and videos, using sensors and advanced algorithms like convolutional neural networks (CNNs). It is important because it allows AI agents to interact with their surroundings, powering applications like image recognition, image segmentation and autonomous vehicles. Its key principles include image acquisition, preprocessing, feature extraction and classification. Visual perception allows AI to automate complex tasks, make real-time decisions and approach human-like performance in fields such as healthcare, robotics and self-driving cars.
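The four principles named above (acquisition, preprocessing, feature extraction and classification) can be illustrated on a tiny grayscale image. This sketch deliberately swaps the CNN for a hand-written horizontal gradient filter and a fixed threshold, so it shows the shape of the pipeline rather than a trained model. The function names, the 3x4 frame and the 0.5 threshold are all assumptions.

```python
from typing import List

Image = List[List[float]]


def normalize(image: Image) -> Image:
    """Preprocessing: scale 0-255 pixel values into the range 0.0-1.0."""
    return [[pixel / 255.0 for pixel in row] for row in image]


def horizontal_gradient(image: Image) -> Image:
    """Feature extraction: difference between horizontally adjacent pixels."""
    return [[row[x + 1] - row[x] for x in range(len(row) - 1)] for row in image]


def classify(features: Image, threshold: float = 0.5) -> str:
    """Classification: report an edge if any gradient is strong enough."""
    strongest = max(abs(value) for row in features for value in row)
    return "vertical edge" if strongest >= threshold else "no edge"


# Image acquisition stand-in: a 3x4 frame that is dark on the left, bright on the right.
frame = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
]
label = classify(horizontal_gradient(normalize(frame)))
```

A CNN learns many such filters from data instead of having one written by hand, but each filter is applied to the image in a broadly similar sliding fashion.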

2. Auditory perception

Auditory perception in AI is the ability of machines to receive, interpret and understand sounds like speech or music by using microphones and algorithms such as recurrent neural networks (RNNs). This capability is important for enabling voice-based communication, natural language processing and automatic speech recognition. The principles of auditory perception include sound acquisition, preprocessing to remove noise, feature extraction (like pitch and frequency) and pattern recognition using deep learning. Applications in AI agents range from virtual assistants and smart speakers to real-time transcription, voice command interfaces and sound detection, which make interactions with technology more natural and accessible.
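To make the feature extraction step concrete, the sketch below estimates the dominant frequency of a short audio signal with a naive discrete Fourier transform. It is an illustration only: the function name, the 800 Hz sample rate and the synthetic 100 Hz tone are assumptions, and production systems would normally use an optimized FFT library rather than this O(n²) loop.

```python
import cmath
import math
from typing import List


def dominant_frequency(samples: List[float], sample_rate: int) -> float:
    """Return the frequency (Hz) of the strongest non-DC component."""
    n = len(samples)
    best_bin, best_magnitude = 0, 0.0
    for k in range(1, n // 2):  # skip bin 0, which is the constant (DC) offset
        coefficient = sum(
            samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)
        )
        magnitude = abs(coefficient)
        if magnitude > best_magnitude:
            best_bin, best_magnitude = k, magnitude
    # Each bin spans sample_rate / n hertz.
    return best_bin * sample_rate / n


# Sound acquisition stand-in: 0.1 seconds of a pure 100 Hz sine tone.
sample_rate = 800
tone = [math.sin(2 * math.pi * 100 * t / sample_rate) for t in range(80)]
frequency = dominant_frequency(tone, sample_rate)
```

With 80 samples at 800 Hz each bin is 10 Hz wide, so the 100 Hz tone falls exactly on one bin and the estimate recovers it. Features like this one are what a downstream pattern recognition model would consume.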

3. Textual perception

Textual perception in AI means a system uses natural language processing to read, interpret and understand written language or text data. Textual perception matters because it lets AI extract meaning, context and intent from large volumes of text, which supports smarter communication and automation. The components of textual perception include semantic understanding and named entity recognition. AI agents use textual perception for chatbots, virtual assistants, information extraction, sentiment analysis and automated content generation.
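The idea of extracting intent and entities from text can be shown with a deliberately simple rule-based sketch. Real systems rely on trained NLP models for semantic understanding and named entity recognition; the keyword table, the capitalization heuristic and all names below are hypothetical stand-ins chosen to keep the example self-contained.

```python
import re
from typing import Dict, List

# Hypothetical intents and trigger words, invented for this example.
INTENT_KEYWORDS: Dict[str, List[str]] = {
    "book_flight": ["book", "flight"],
    "check_weather": ["weather", "forecast"],
}


def tokenize(text: str) -> List[str]:
    """Split text into lowercase word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def detect_intent(text: str) -> str:
    """Pick the intent whose keywords appear most often, or 'unknown'."""
    tokens = set(tokenize(text))
    scores = {
        intent: sum(word in tokens for word in words)
        for intent, words in INTENT_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"


def extract_entities(text: str) -> List[str]:
    """Naive entity spotting: capitalized words after the first word."""
    words = re.findall(r"[A-Za-z]+", text)
    return [word for word in words[1:] if word[0].isupper()]


command = "Book a flight from Paris to Tokyo"
intent = detect_intent(command)
entities = extract_entities(command)
```

The capitalization heuristic breaks easily (for example, on all-lowercase input), which is one reason practical agents use statistical or neural models for this step.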

4. Environmental perception

Environmental perception in AI means a system uses computer vision, machine learning and sensory inputs to interpret and understand its surroundings by integrating data from multiple sources into a multimodal view. AI agents use environmental perception for tasks like autonomous driving and environmental monitoring, but they face challenges with sensor reliability, complex data fusion and adapting to unpredictable environments.
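One common way to combine readings from several sensors is inverse-variance weighting, in which more reliable sensors get more influence on the fused estimate. The sketch below assumes each sensor reports a value with a known variance and that sensor errors are independent. The camera and LiDAR numbers are invented for illustration, and this is one possible fusion technique rather than the method any particular system uses.

```python
from typing import List, Tuple


def fuse(readings: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse (value, variance) pairs into one (value, variance) estimate.

    Each reading is weighted by 1 / variance, so noisier sensors count less.
    """
    weights = [1.0 / variance for _, variance in readings]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, readings)) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical distance estimates in meters: a noisy camera and a precise LiDAR.
camera = (10.0, 4.0)  # value, variance
lidar = (12.0, 1.0)
distance, variance = fuse([camera, lidar])
```

The fused distance lands closer to the LiDAR reading, and the fused variance is lower than either sensor's own variance, which is the main benefit of combining sources.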

5. Predictive perception

Predictive perception in AI means an agent uses machine learning and probabilistic models to analyze past and current data, anticipate future events and inform decisions. Predictive perception lets AI systems forecast trends, detect risks and optimize actions in areas like healthcare, finance and logistics. Unlike traditional perception, which only interprets present data, predictive perception enables proactive, data-driven responses by providing foresight and adaptability.
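A minimal way to illustrate anticipating a future reading is to fit a straight line to past readings and extrapolate one step ahead. The sketch below uses ordinary least squares as a simple stand-in for the richer models mentioned above. The function names, the temperature history and the 17-degree alert threshold are assumptions for the example.

```python
from typing import List


def forecast_next(history: List[float]) -> float:
    """Fit a least-squares line to the history and predict the next value."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two readings to fit a trend")
    mean_x = (n - 1) / 2
    mean_y = sum(history) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(history))
    variance_x = sum((x - mean_x) ** 2 for x in range(n))
    slope = covariance / variance_x
    intercept = mean_y - slope * mean_x
    return intercept + slope * n  # the next time step has index n


def proactive_action(history: List[float], limit: float) -> str:
    """Act before the limit is crossed, based on the predicted next reading."""
    return "start_cooling" if forecast_next(history) > limit else "no_action"


# Hypothetical temperature readings rising by two degrees per step.
readings = [10.0, 12.0, 14.0, 16.0]
predicted = forecast_next(readings)
action = proactive_action(readings, limit=17.0)
```

The latest reading (16.0) is still under the limit, but the forecast is not, so the agent acts early. That gap between the present percept and the predicted one is what separates predictive from purely reactive perception.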

What are the emerging trends in AI perception?

The emerging trends in AI perception focus on making machines smarter, faster, more autonomous and easier to understand.

The emerging trends in AI perception are given below.

- Explainable AI (XAI): Explainable AI makes systems transparent and shows users how the AI reaches decisions. It builds trust and helps organizations meet regulatory requirements in fields like healthcare, finance and compliance.

- Edge AI: Edge AI processes data directly on local devices. It delivers faster responses and keeps data private. Industries use Edge AI in autonomous vehicles and smart cameras where they need low delay and high security.

- Generative AI: Generative AI creates original text, images, audio or video through models like GPT, Vosu.ai and DALL·E. It reshapes creative fields, powers real-time content production and supports science and design.

- Multimodal AI: Multimodal AI uses inputs from text, image, audio and video to understand complex situations. It improves tools like virtual assistants and robots with deeper, context-aware insights.

- Agentic AI: Agentic AI enables autonomous agents to complete tasks on their own, work with other agents and adapt to new scenarios. This trend supports AI that acts with purpose in business and daily life.

- Quantum AI: Quantum AI applies quantum computing to artificial intelligence. It aims to solve certain hard problems faster than classical systems and could support progress in optimization, encryption and science.

How are startups approaching AI perception?

The approaches startups take towards AI perception are given below.

- Automate tasks: Startups cut manual work by handling tasks like quality checks, medical tests and customer service.

- Personalize services: Startups shape products to match each user's needs through direct choices and clear preferences in retail, media and education.

- Build smart tools: Startups design tools that watch, decide and act in offices, homes and industrial sites without human help.

- Solve practical problems: Startups tackle issues like disease detection, machine failure, pollution tracking and inventory control with real, tested solutions.

How is perception in artificial intelligence changing the world?

The ways that AI perception is changing the world are given below.

- Enhance visual perception: Visual perception in AI helps machines detect objects, read images and perform tasks in fields like healthcare, security and transportation.

- Improve auditory perception: Auditory perception in AI allows systems to understand speech, identify sounds and support voice communication, making technology easier to use and more responsive.

- Influence human perception: AI driven perception expands human ability, offers clear insights, supports decisions and fills gaps in understanding complex data and environments.

Are there any differences between spatial awareness and perception in AI?

Yes, spatial awareness and perception in AI are different. Spatial awareness focuses on understanding spatial relationships and object positions in three-dimensional space, while perception involves collecting and interpreting sensory data for tasks like object recognition, speech recognition or image analysis. Spatial awareness uses perception as input but centers on mapping, navigation and reasoning about space.
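The distinction can be sketched in code: perception produces detections, and spatial awareness reasons about how those detections relate in space. The example below assumes perception has already returned labeled 2D bounding boxes in image coordinates, where x grows rightward and y grows downward. The `Box` class, the coordinates and the relation names are illustrative assumptions, and 2D boxes are used here only as a simplification of three-dimensional reasoning.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Box:
    """A detected object, as a perception module might report it."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def spatial_relations(a: Box, b: Box) -> List[str]:
    """Describe where box a sits relative to box b.

    Image coordinates are assumed: smaller y means higher in the frame.
    Overlapping boxes yield no relation along the overlapping axis.
    """
    relations = []
    if a.x_max <= b.x_min:
        relations.append("left of")
    if a.x_min >= b.x_max:
        relations.append("right of")
    if a.y_max <= b.y_min:
        relations.append("above")
    if a.y_min >= b.y_max:
        relations.append("below")
    return relations


# Hypothetical detections produced by an upstream object detector.
cup = Box("cup", 10, 10, 20, 20)
laptop = Box("laptop", 30, 40, 80, 90)
cup_vs_laptop = spatial_relations(cup, laptop)
```

Here the detector answers "what is in the scene", while `spatial_relations` answers "where things are relative to each other", which is the input that mapping and navigation build on.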

Does an AI agent collect and perceive data?

Yes, an AI agent does collect and perceive data because it uses sensors or data input to gather information from its surroundings, processes this data to understand the situation and then makes informed decisions based on that understanding. This ability allows the agent to interact with its surroundings and achieve its goals.

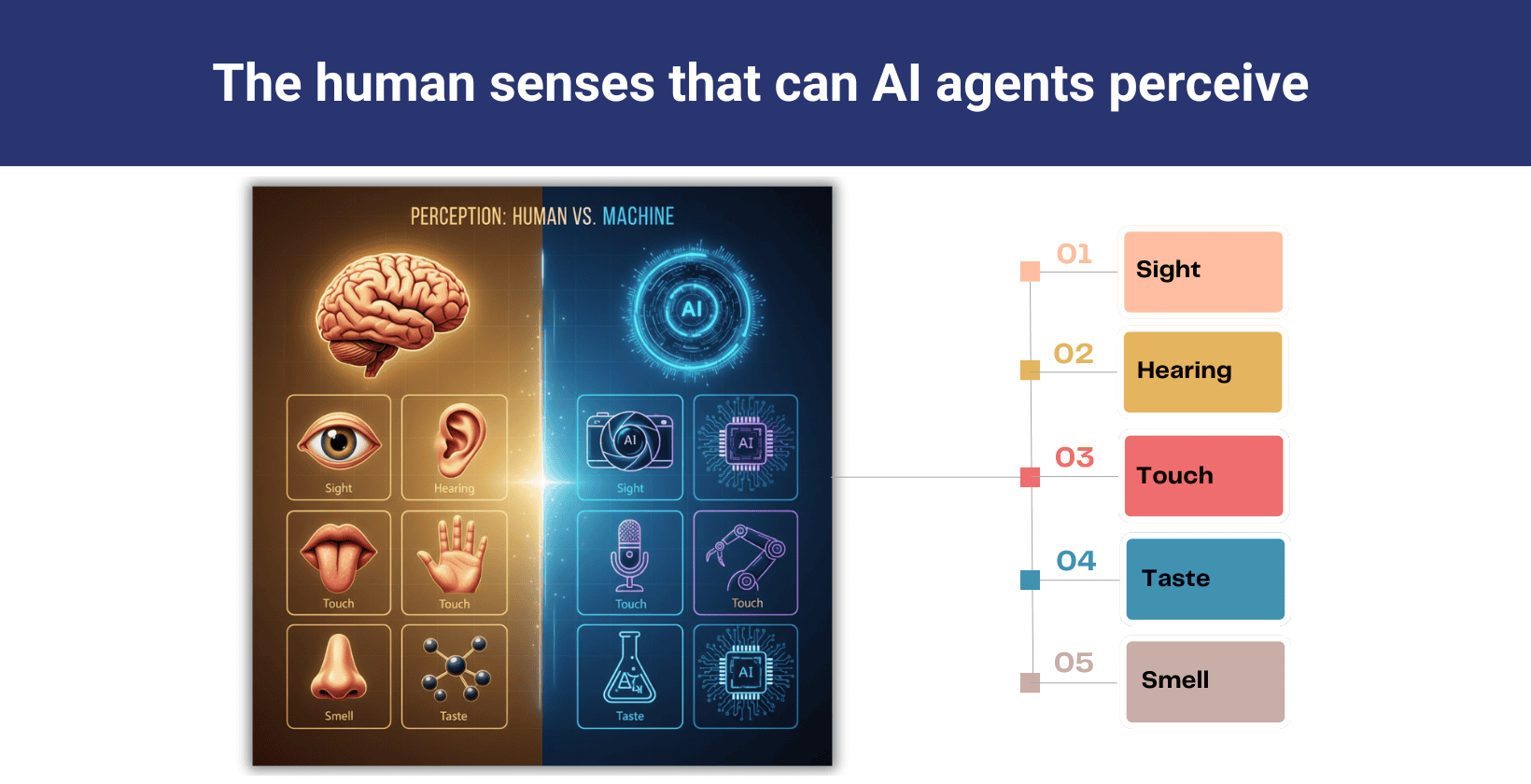

What human senses can AI agents perceive?

AI agents can emulate the human senses of sight, hearing, touch, taste and smell. They use cameras for sight, microphones for hearing, tactile sensors for touch, chemical sensors for taste and gas sensors for smell. These tools help AI agents understand and interact with their surroundings accurately.

The human senses that AI agents can emulate are outlined below.

- Sight: AI agents use cameras and apply computer vision to interpret images and videos. They recognize objects, understand scenes and navigate spaces.

- Hearing: AI agents use microphones and process audio to recognize speech, detect sounds and analyze audio for communication and awareness.

- Touch: AI agents use tactile sensors to detect pressure, texture and vibrations. They interact with physical objects and sense contact.

- Taste: AI agents use electronic tongues or chemical sensors to analyze flavors and identify compositions for food safety and quality control.

- Smell: AI agents use electronic noses or gas sensors to detect and identify odors or chemical signatures in safety, healthcare and environmental contexts.

How does sight impact perception in AI agents?

The ways that sight impacts perception in AI agents are given below.

- Information gathering: AI agents use sight to collect large amounts of visual data from their surroundings. They use this input to understand and interact with the world.

- Object recognition: AI agents use sight to identify, classify and distinguish objects, shapes and patterns in images or video. This supports complex decision tasks such as navigation, automation and scene understanding.

- AI agent behavior: Visual perception shapes how AI agents respond to their surroundings. They navigate, avoid obstacles and take context-aware actions in real time.

- Learning adaptation: AI agents use sight to learn from visual experiences. They improve accuracy and adapt through repeated exposure to new visual data.

Does an AI agent perceive time like humans?

No, an AI agent does not perceive time like humans. It uses timestamps and processing speed to track events, but it lacks a sense of duration or subjective experience. Humans feel time pass and remember events emotionally, while an AI agent has no awareness or feeling and only records and processes sequences of data.