AI voice, short for artificial intelligence voice, is the ability of computers to produce human-like speech that sounds natural and expressive. AI systems use spoken language to interact with users, which allows natural, human-centered communication. This verbal interface lets machines process voice inputs, interpret intent and respond conversationally.

AI voice operates through a combination of natural language processing (NLP) and text to speech (TTS) synthesis. NLP interprets the meaning of the input, and TTS converts the response back into speech. The result is synthetic speech that closely resembles the voices of real human speakers.

Top applications of AI voice include virtual assistants and entertainment. Virtual assistants like Siri and Alexa use AI voice to understand and respond to user queries. In entertainment, AI-generated voices voice video game characters and dub foreign-language content on platforms such as Netflix.

What is an AI voice?

AI voice refers to AI-powered technology that converts text into natural-sounding human speech, a process also known as voice synthesis or text to speech (TTS).

AI voice systems combine deep learning (neural networks), NLP and speech synthesis techniques to model the nuances of human speech. Neural networks are trained on large datasets of recorded human speech to learn speech patterns and pronunciation. NLP helps the system understand the context, grammar and meaning of the text. Speech synthesis components, such as waveform generators, transform this linguistic data into audible speech.

Many AI voice systems also incorporate speech recognition, which allows them to process spoken input and respond. Siri and Alexa are examples of assistants built on speech recognition. Together, these technologies power applications ranging from audiobook narration to customer service chatbots and make human-machine interaction more natural.

How does AI voice work?

AI voice technology combines several processes: deep learning, text analysis, voice synthesis and natural language processing (NLP). Together, these components transform text into realistic human speech with accurate intonation and emotional expression.

The process of how AI voice works is given below.

- Deep learning: Deep learning uses neural networks trained on extensive speech datasets to recognize and replicate human speech patterns. This training enables accurate reproduction of pronunciation, rhythm and emotional tone in synthetic voices.

- Text analysis: Text analysis breaks the input text into its linguistic components, for example by expanding numbers and abbreviations into words. This stage identifies grammar, sentence structure and points of emphasis to ensure proper pronunciation and natural pacing in the generated speech.

- Voice synthesis: Voice synthesis converts the analyzed text into audible speech. Modern techniques such as neural vocoders generate lifelike waveforms that closely mimic human vocal characteristics, adjusting pitch, speed and tone dynamically.

- Natural language processing (NLP): NLP provides the system's contextual understanding through AI-powered language models. This component interprets slang, intent and conversational flow, which allows the AI voice to adapt its delivery for natural, human-like interactions.

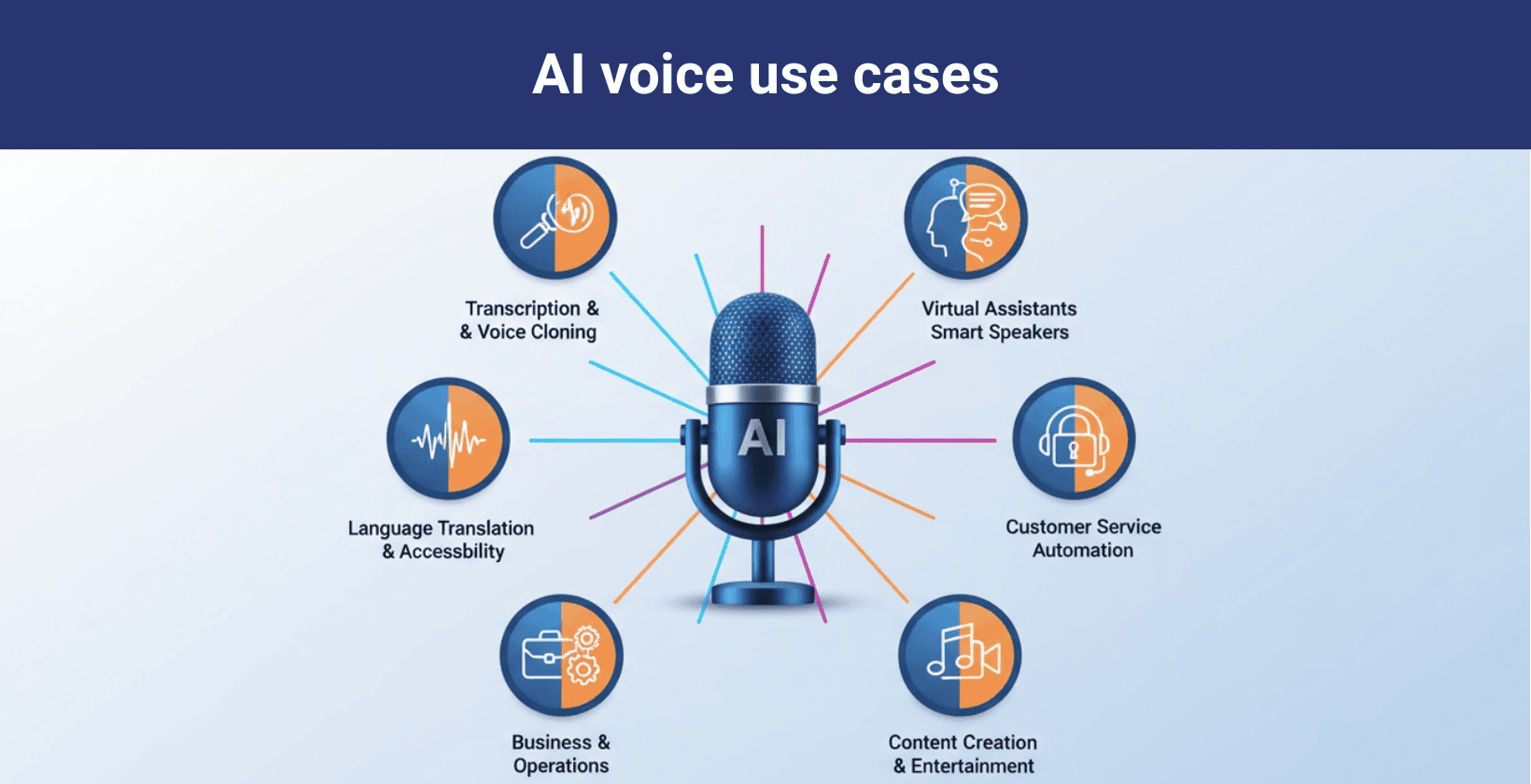
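The text analysis and voice synthesis stages can be illustrated with a deliberately simplified Python sketch that uses only the standard library. It is a toy, not a neural system: the hypothetical `analyze_text` function stands in for text analysis (normalizing abbreviations and digits), and `synthesize` replaces the neural vocoder with plain sine tones whose pitch and duration respond to the assumed `base_pitch_hz` and `speed` parameters. The abbreviation table, the tone mapping and the 16 kHz sample rate are all illustrative assumptions.

```python
import io
import math
import re
import struct
import wave

SAMPLE_RATE = 16_000  # samples per second (illustrative choice)

# Hypothetical normalization tables; real text front ends cover far more cases.
ABBREVIATIONS = {"dr.": "doctor", "st.": "street"}
DIGITS = ["zero", "one", "two", "three", "four",
          "five", "six", "seven", "eight", "nine"]


def analyze_text(text: str) -> list[str]:
    """Toy text analysis: lowercase, expand abbreviations and digits, tokenize."""
    words = []
    for raw in text.lower().split():
        if raw in ABBREVIATIONS:
            words.append(ABBREVIATIONS[raw])
            continue
        token = re.sub(r"[^a-z0-9]", "", raw)  # strip punctuation
        if token.isdigit():
            words.extend(DIGITS[int(d)] for d in token)  # "42" -> "four", "two"
        elif token:
            words.append(token)
    return words


def synthesize(words: list[str], base_pitch_hz: float = 120.0,
               speed: float = 1.0) -> list[float]:
    """Toy synthesis: one sine tone per word, standing in for a neural vocoder."""
    samples = []
    for word in words:
        # Derive a pitch offset from the word so different words sound different.
        freq = base_pitch_hz * (1 + (sum(map(ord, word)) % 12) / 12)
        n = int(SAMPLE_RATE * 0.06 * len(word) / speed)  # longer words last longer
        samples.extend(0.3 * math.sin(2 * math.pi * freq * i / SAMPLE_RATE)
                       for i in range(n))
        samples.extend([0.0] * int(SAMPLE_RATE * 0.05 / speed))  # pause between words
    return samples


def to_wav_bytes(samples: list[float]) -> bytes:
    """Encode samples as 16-bit mono PCM WAV data."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes = 16-bit samples
        wav.setframerate(SAMPLE_RATE)
        frames = b"".join(
            struct.pack("<h", int(max(-1.0, min(1.0, s)) * 32767)) for s in samples
        )
        wav.writeframes(frames)
    return buf.getvalue()


words = analyze_text("Dr. Smith lives at 42 Oak St.")
print(words)  # ['doctor', 'smith', 'lives', 'at', 'four', 'two', 'oak', 'street']
audio = to_wav_bytes(synthesize(words, base_pitch_hz=140.0, speed=1.2))
```

Keeping the stages as separate functions mirrors the pipeline described above: in a real system, the toy `synthesize` would be swapped for a trained acoustic model and neural vocoder, while the text analysis and audio export steps would stay structurally similar.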

What are the applications of AI voice?

The applications of AI voice include virtual assistants, entertainment, accessibility solutions and content creation. This technology is transforming industries by allowing natural voice interactions across various platforms and services.

The applications of AI voice are outlined below.

- Virtual assistants and smart speakers: AI virtual assistants include Siri, Alexa and Google Assistant. These AI-powered systems use natural language processing to understand voice commands, answer questions, control smart home devices and perform tasks through conversational interfaces.

- Entertainment solutions: AI entertainment applications include character voices in games like Cyberpunk 2077, AI-dubbed movies on Netflix, and audiobook narration from services like Audible. By 2025, real-time AI dubbing is also widely used by YouTube and TikTok creators to instantly localize or stylize their content for global audiences.

- Accessibility tools: AI accessibility solutions include screen readers like VoiceOver and TalkBack, as well as voice-controlled interfaces for disabled users. These AI technologies convert text to speech to help visually impaired individuals access digital content.

- Voice cloning and personalization: AI voice cloning services include Resemble.ai, Descript, and VocaliD. These platforms allow users to create custom digital voices or replicate existing ones for personalized content creation and voice preservation. Increasingly, creators monetize these digital voices in podcasts, audiobooks, and social media content.

- Customer support automation: AI customer service solutions include voice bots from companies like Nuance, IBM Watson, and Google’s Contact Center AI. In 2025, large-scale AI call centers are being deployed across banks, airlines, and retail chains to manage high volumes of customer interactions with natural-sounding conversational AI.

- Marketing and advertising: AI marketing tools include text to speech platforms like Amazon Polly and WellSaid Labs, which are used to produce personalized voice ads. Brands use these AI solutions to create targeted, dynamic voiceovers that adapt to different customer segments.

- Business operations: AI business applications include Otter.ai for meeting transcription, Gong for sales call analysis, and Microsoft’s AI meeting assistants. These tools use voice AI to automate documentation and extract insights from conversations.

- Content creation: AI content tools include Murf.ai for video voiceovers, Play.ht for podcast narration, and Lovo.ai for social media content. These platforms enable creators to generate professional-quality voice content quickly, and many are now integrated directly into creator monetization workflows. Vosu.ai lets creators produce lip-synced AI videos with voice, avatars and music in one platform.

- Education solutions: AI education tools include Duolingo’s voice exercises, speech recognition in language apps like Babbel, and AI reading assistants for students. These technologies enhance learning through interactive voice experiences.

What technologies are deployed in AI voice systems?

The deployed technologies in AI voice systems include deep learning, automatic speech recognition, natural language processing and other advanced solutions that enable machines to understand, process and generate human like speech.

The technologies powering AI voice systems are given below.

- Deep learning and neural networks: Deep learning models like WaveNet and Tacotron 2 formed the foundation of modern AI voice systems. These early neural networks were trained on many hours of recorded speech to learn speech patterns, enabling natural synthetic voice generation. Today, cutting-edge systems such as Microsoft’s VALL-E X (cross-lingual cloning), OpenAI Voice Engine (voice cloning from a short audio sample), Meta’s Voicebox (multilingual, in-context speech generation), and Google AudioPaLM (unifying speech, text, and translation) push voice synthesis far beyond these early milestones.

- Automatic speech recognition (ASR): ASR technology powers voice assistants by converting spoken words into text. Advanced systems use acoustic and language models to achieve high accuracy in real world conditions.

- Natural language processing (NLP): NLP helps AI understand context and meaning in human speech. Modern models interpret complex queries and generate appropriate responses in conversational systems.

- Text to speech (TTS) synthesis: Modern TTS systems use neural networks to produce lifelike speech. These solutions adjust tone, pace and emotion to match different contexts.

- Machine learning algorithms: ML algorithms continuously improve voice AI performance by analyzing interaction data. They help systems better recognize accents and adapt to user preferences.

- Voice cloning technology: Voice cloning solutions recreate a person's voice from sample audio. This technology is used for personalized assistants and digital voice preservation.
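A common way to quantify the "high accuracy" of automatic speech recognition is word error rate (WER): the number of word substitutions, deletions and insertions needed to turn the recognizer's output into a reference transcript, divided by the number of words in the reference. The sketch below is a minimal, assumed implementation using word-level edit distance; `word_error_rate` is a hypothetical helper name, the example sentences are made up, and real benchmarks usually apply more careful text normalization (punctuation, numbers, casing) before scoring.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in the reference."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dist[i][j] = minimum edits turning the first i reference words
    # into the first j hypothesis words (word-level Levenshtein distance).
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete every reference word
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert every hypothesis word
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,  # deletion
                dist[i][j - 1] + 1,  # insertion
                dist[i - 1][j - 1] + substitution,  # match or substitution
            )
    return dist[len(ref)][len(hyp)] / len(ref)


# One misrecognized word out of five gives a WER of 0.2.
print(word_error_rate("turn on the kitchen lights", "turn on the chicken lights"))  # 0.2
```

Lower is better, and because insertions count as errors, WER can exceed 1.0 when a recognizer outputs many extra words.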

How to create an AI voice?

To create an AI voice, select a reliable platform, enter your text content, choose a voice profile, customize settings such as speed and pitch and generate the audio in various formats. This process makes professional quality voiceovers accessible to everyone.

The five steps to generate a voice with AI are given below.

- Select an AI voice platform: Begin by selecting a multi-model AI platform such as Vosu.ai, which gives creators access to multiple leading AI voice models, including ElevenLabs and other top systems, in one place. Users can generate voices, apply cloning and integrate the results directly into videos, avatars and music projects without switching tools.

- Enter your text content: Type or paste the text you want converted to speech. Most platforms handle several thousand characters per generation. Well structured text produces the best vocal results.

- Choose a voice profile: Select from available voice options including different genders, ages and accents. Premium services offer celebrity voices or custom voice cloning capabilities.

- Customize voice settings: Adjust parameters like speed, pitch and emphasis to achieve your desired tone. Advanced tools allow word by word pronunciation edits and emotional inflection control.

- Generate and export audio: Process your text to create the voiceover. Most platforms provide instant generation and multiple export formats (MP3, WAV) suitable for various applications.
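Besides on-screen sliders, several TTS services, such as Amazon Polly, accept speed, pitch and emphasis settings as SSML (Speech Synthesis Markup Language), a W3C standard. The sketch below builds an SSML string with Python's standard library to show how the "customize voice settings" step can be expressed as markup. `build_ssml` is a hypothetical helper, and the accepted values for `rate` and `pitch` (and which tags are supported at all) vary by provider, so treat this as an illustration and check your platform's SSML documentation.

```python
import xml.etree.ElementTree as ET
from typing import Optional


def build_ssml(text: str, rate: str = "medium", pitch: str = "default",
               emphasize: Optional[str] = None) -> str:
    """Wrap text in SSML <prosody> (speed, pitch) and optional <emphasis> tags.

    Hypothetical helper: accepted rate/pitch values differ between TTS providers.
    """
    speak = ET.Element("speak")
    prosody = ET.SubElement(speak, "prosody", rate=rate, pitch=pitch)
    if emphasize and emphasize in text:
        before, after = text.split(emphasize, 1)
        prosody.text = before
        emph = ET.SubElement(prosody, "emphasis", level="strong")
        emph.text = emphasize
        emph.tail = after  # text that follows the emphasized phrase
    else:
        prosody.text = text
    return ET.tostring(speak, encoding="unicode")  # escapes &, <, > automatically


print(build_ssml("Welcome to our show", rate="slow", pitch="+10%", emphasize="our"))
# <speak><prosody rate="slow" pitch="+10%">Welcome to <emphasis level="strong">our</emphasis> show</prosody></speak>
```

Building the markup with `ElementTree` rather than string concatenation keeps user text safely escaped, so characters like `&` in a script do not produce invalid SSML.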

What are the benefits of using AI voice?

The benefits of using AI voice are cost and time efficiency, accessibility improvements and multilingual capabilities, which make it a transformative technology for businesses and content creators.

The advantages of using AI voice technology are outlined below.

- Cost and time efficiency: AI voice solutions reduce production expenses by eliminating studio costs and actor fees while delivering instant results that would normally require lengthy recording sessions.

- Accessibility and inclusivity: The technology provides enhanced accessibility features, which allow visually impaired users to interact with digital content through high quality screen readers and voice navigation systems.

- Scalability improvements: Businesses can generate large volumes of voice content without quality degradation, which allows audio materials to scale easily for global campaigns or growing customer bases.

- Productivity enhancements: Organizations achieve greater operational efficiency by automating voice related tasks, from customer service interactions to audio content production and documentation.

- Brand customization options: Companies develop distinctive audio identities through customizable voice parameters that maintain consistent branding across all customer communication channels.

- Customer experience upgrades: AI voice interfaces deliver natural, 24/7 customer support with personalized responses that reduce wait times and improve satisfaction metrics.

- Multilingual capabilities: The technology supports multiple languages and accents, which reduces barriers to global communication and lowers the cost of producing localized audio.

What are the ethical considerations for using AI voice?

The ethical considerations for using AI voice include authenticity concerns, privacy issues, potential bias and accountability challenges that require addressing as this technology advances.

The ethical considerations for AI voice are given below.

- Authenticity and misinformation: AI voice raises concerns about impersonation through deepfakes and potential misuse in misinformation campaigns. The technology’s ability to mimic voices convincingly creates challenges for verifying authenticity and maintaining trust in digital communications. These risks are no longer theoretical: AI voice cloning was used during several political campaigns in 2024 and 2025 to produce misleading audio, underscoring the urgency of disclosure and monitoring.

- Privacy and consent: Issues emerge around data collection practices, unauthorized voice cloning, and questions about who maintains ownership and control of synthesized voices. Many AI voice platforms, including ElevenLabs, now require verified consent from individuals before cloning, setting new standards for ethical use. Proper consent mechanisms have become critical for compliance and public trust.

- Bias and fairness: AI voice systems can perpetuate data bias when trained on limited datasets, favoring certain accents or demographics. These limitations can affect inclusivity, leaving underrepresented populations with less reliable experiences. Ethical adoption requires continuous dataset diversification and equal access to advanced AI voice technologies across different regions and user groups.

- Accountability and oversight: The lack of transparency in some AI voice systems makes it difficult to determine responsibility when issues arise. Governments and regulators are beginning to step in; the EU AI Act, for example, now explicitly requires disclosure when AI-generated voices are used in deepfakes or impersonations. This regulatory pressure is pushing developers to adopt clearer accountability frameworks and audit mechanisms for voice AI deployment.

Is AI voice cloning illegal?

AI voice cloning is not outright illegal; its legality depends on consent, purpose and regional laws. Authorized use for accessibility, voice preservation or creative content is lawful, while unauthorized cloning for fraud or impersonation violates privacy and intellectual property rights.

From a copyright and monetization perspective, AI-only generated voices are not copyrightable in the US and EU, though human-guided edits may qualify. Platforms like WellSaid, Murf, and ElevenLabs grant usage licenses rather than ownership, and since 2024 YouTube explicitly allows AI voices in monetized content as long as the work is original and policy-compliant.

Is AI voice copyright free?

No, AI voice is not copyright free, because its legal status depends on human involvement and licensing terms. Copyright protection requires "human authorship," so purely AI-generated voices generally lack protection. AI voice platforms like WellSaid Labs also impose usage restrictions, which means users do not own the voices outright.

Can AI voices be monetized?

Yes, AI voices can be monetized on platforms like YouTube when the content is original and complies with the platform's monetization and community guidelines. YouTube allows AI voiceovers, but videos must demonstrate originality and value; repetitive, mass-produced or low-effort AI-generated content may not qualify for monetization.

Can I create an AI voice for myself?

Yes, you can create an AI voice of yourself using modern AI voice cloning tools. These tools analyze recordings of your voice to generate a digital replica that sounds like you. You can then use it to synthesize speech, record voiceovers or personalize digital content with your own AI-generated voice.

Will AI replace voice-overs?

Yes, AI will replace some voiceover work, particularly simple, repetitive or low-budget projects, because of its efficiency and cost-effectiveness. AI is unlikely to fully replace human voiceover artists for roles requiring deep emotion, nuance or creative interpretation.

However, the industry is moving toward unified platforms. Vosu.ai already reflects this shift by integrating multiple frontier voice models, advanced cloning and cross-media creation in a single hub.

What is the difference between human voice and AI voice?

The main differences between human and AI voices lie in authenticity, adaptability and emotional depth. The human voice, shaped by vocal cords and individual physiology, conveys genuine emotion, adapts fluidly to context and reflects rich social and cultural cues. An AI voice is generated by algorithms that offer consistency and realism but lack true emotional awareness and spontaneity.

Can you detect AI voices?

Yes, AI voices can be detected using specialized tools such as PlayHT Voice Classifier, ElevenLabs AI Speech Classifier, and Resemble AI, which analyze audio samples for patterns unique to synthetic speech.

Detection methods are improving but are not perfect, and skilled cloning can still bypass classifiers. Emerging solutions like DeepMind’s SynthID for audio watermarking (2024) and the latest ElevenLabs speech classifier (2025) represent major steps toward reliable detection, but accuracy continues to evolve with the technology.