Consistent characters are fictional or real individuals whose appearance, and in a story their behavior, dialogue and personality traits, remain stable and recognizable throughout a story or context. Creating a consistent character in Stable Diffusion involves three basics: a reference image to define the character, a fixed seed to stabilize results and a detailed prompt for control. It also involves correcting inconsistencies with techniques like inpainting or ControlNet, and running experiments to optimize the output. For stronger consistency across generations, Stable Diffusion supports advanced methods such as textual inversion, LoRA and DreamBooth.

Some users do not want to fine-tune models or learn LoRA or DreamBooth. For them, platforms like Headshotly.ai offer a shortcut. You upload six selfies, and the system learns your face and generates 40+ consistent images in different poses, outfits and styles. This makes it well suited to UGC creators, professional headshots or quick character creation without the technical overhead.

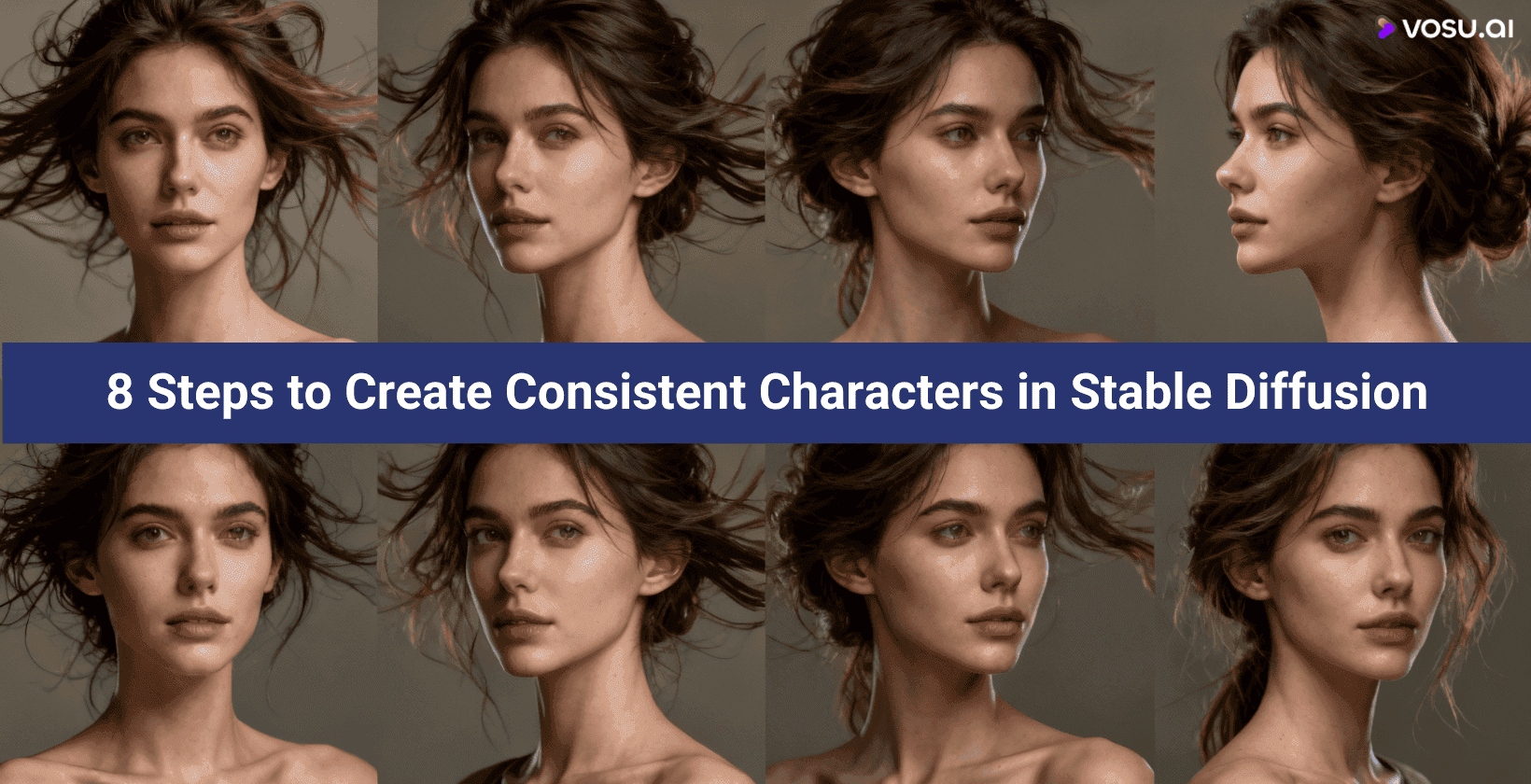

The 8 steps to create a consistent character in Stable Diffusion are listed below.

- Define a standard character with a reference image

- Set a fixed seed

- Add a detailed prompt

- Fix your character

- Run experiments to optimize your character output

- Use textual inversion

- Use LoRA

- Use DreamBooth

1. Define a standard character with a reference image

Define a standard character by providing a reference image that clearly shows the character’s appearance. Stable Diffusion does not read a reference image on its own. You supply it through img2img, ControlNet or an image-prompt adapter such as IP-Adapter, which lets the model pick up key features like hairstyle, facial shape and attire and preserve the character’s identity. The reference image then acts as a constant guide for visual consistency as the prompt or scene changes.
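When you supply the reference image through img2img, a strength (denoising strength) setting decides how much of it survives. In diffusers-style img2img pipelines, the number of denoising steps that actually run appears to be `min(int(num_inference_steps * strength), num_inference_steps)` for a first-order scheduler. The helper below is a hypothetical sketch of that arithmetic, not a library function.

```python
def img2img_denoising_steps(num_inference_steps: int, strength: float) -> int:
    """Hypothetical helper: denoising steps an img2img run performs.

    Assumes the diffusers-style rule: strength 0.0 leaves the reference
    untouched, 1.0 runs the full schedule and effectively ignores it.
    """
    if num_inference_steps < 0:
        raise ValueError("num_inference_steps must be non-negative")
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0.0 and 1.0")
    return min(int(num_inference_steps * strength), num_inference_steps)


# Lower strength keeps more of the reference character's look.
for strength in (0.25, 0.5, 0.75, 1.0):
    print(strength, img2img_denoising_steps(40, strength))
```

Lower strength values preserve more of the reference. Higher values give the prompt more freedom to change the character.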

2. Set a fixed seed

Stable Diffusion lets you set a fixed seed, usually by entering a number in your interface’s seed field. The seed determines the random noise that generation starts from, so the same seed, prompt and settings reproduce the same image. The seed and prompt together shape how the model renders poses, expressions and backgrounds. Keeping the seed fixed while making small prompt changes therefore keeps a character’s appearance far more stable across outputs than random seeds do.
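A seed matters because it fixes the random starting noise. The sketch below uses Python’s `random.Random` as a stand-in for the seeded generator that a real pipeline uses (for example, a seeded `torch.Generator` passed to a diffusers pipeline). The function name and the tiny noise size are assumptions for illustration.

```python
import random


def initial_latent_noise(seed: int, size: int = 8) -> list[float]:
    """Toy stand-in for the Gaussian latent noise a diffusion sampler starts from.

    Real pipelines draw a tensor (e.g. 4x64x64 for SD 1.5 at 512x512) from a
    seeded generator; here a seeded random.Random plays that role (assumption).
    """
    rng = random.Random(seed)  # independent generator, unaffected by global state
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


same_a = initial_latent_noise(1234)
same_b = initial_latent_noise(1234)
other = initial_latent_noise(1235)

print(same_a == same_b)  # True: same seed, identical starting noise
print(same_a == other)   # False: a new seed changes the starting point
```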

3. Add a detailed prompt

A detailed prompt is a well-designed instruction that guides the model to generate results aligned with your intent. It specifies the subject, style and environment, and adds specific keywords for clarity and better control.

An example of a detailed prompt is given below.

Prompt: a tall, adventurous boy with messy black hair, wearing a red jacket and blue jeans, standing in a sunlit forest. Visual aesthetics: vibrant, lively colors. Negative prompt: glasses, backpacks, animals.

This prompt defines the character’s appearance, clothing and environment, while the visual aesthetics guidance shapes the overall look and mood. The negative prompt keeps out unwanted elements that could break consistency. Prompts work best when you specify the subject and style, use descriptive language, include relevant modifiers, avoid ambiguity and add negative terms to filter results.
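One practical way to keep prompts consistent is to store the character’s fixed traits once and change only the scene. The `CharacterPrompt` class below is a hypothetical template, not part of any Stable Diffusion tool. It rebuilds the example prompt above from its parts.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class CharacterPrompt:
    """Hypothetical template that stores a character's fixed traits once."""
    subject: str
    appearance: tuple[str, ...]
    scene: str
    style: tuple[str, ...] = ()
    negatives: tuple[str, ...] = ()

    def positive(self) -> str:
        # Identity traits are always emitted in the same order and wording.
        parts = [self.subject, *self.appearance, self.scene, *self.style]
        return ", ".join(p for p in parts if p)

    def negative(self) -> str:
        return ", ".join(self.negatives)


boy = CharacterPrompt(
    subject="a tall, adventurous boy",
    appearance=("messy black hair", "red jacket", "blue jeans"),
    scene="standing in a sunlit forest",
    style=("vibrant, lively colors",),
    negatives=("glasses", "backpack", "animals"),
)
city = replace(boy, scene="walking down a rainy city street")

print(boy.positive())
print(city.positive())  # same identity traits, new scene
print(boy.negative())
```

Because the template is frozen, each new scene is a copy made with `replace`. The original character definition cannot drift by accident.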

4. Fix your character

Stable Diffusion lets you fix your character with tools such as inpainting, ControlNet and upscaling, which address accuracy and appearance. Inpainting regenerates only a masked area. This makes it the key method for fixing errors in hands, clothing or facial expressions while preserving the rest of the character, including consistent facial features. ControlNet supports consistent character generation by guiding poses and proportions with control images such as pose skeletons, edge maps or depth maps. This keeps the character’s appearance uniform across images.

Upscaling and detailers (tools that detect areas such as faces and re-render them at higher detail) improve sharpness and clarity so the final character looks polished. Prompting techniques help refine the character further and fix minor visual issues, which leads to high-quality, consistent results. Examples include delaying when the character’s description takes effect in the prompt structure, or being more precise about desired traits.
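Inpainting tools regenerate only the masked region and blend it back into the image. The function below is a simplified sketch of that final compositing step. It assumes a soft mask in [0, 1] and uses plain nested lists in place of real image arrays. Real tools first run the diffusion model on the masked area, which is not shown here.

```python
def composite_inpaint(original, generated, mask):
    """Blend an inpainted result back into the original image.

    All three arguments are H x W grids of floats (grayscale for simplicity).
    mask values lie in [0, 1]: 0 keeps the original pixel, 1 takes the
    regenerated pixel, and values in between feather the seam.
    """
    if not (len(original) == len(generated) == len(mask)):
        raise ValueError("images and mask must have the same height")
    result = []
    for o_row, g_row, m_row in zip(original, generated, mask):
        if not (len(o_row) == len(g_row) == len(m_row)):
            raise ValueError("images and mask must have the same width")
        result.append([(1.0 - m) * o + m * g for o, g, m in zip(o_row, g_row, m_row)])
    return result


face = [[0.2, 0.2], [0.2, 0.2]]  # original pixels
redo = [[0.9, 0.9], [0.9, 0.9]]  # freshly inpainted pixels
mask = [[0.0, 1.0], [0.5, 0.0]]  # regenerate top-right, feather bottom-left
print(composite_inpaint(face, redo, mask))
```

Pixels where the mask is 0 come back unchanged. This is why inpainting can repair a hand or an outfit without disturbing the face you want to keep.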

5. Run experiments to optimize your character output

Running experiments to optimize your character output is important because it shows which approaches generate the most accurate and consistent results. Reference materials such as the original reference images and thorough character profiles provide objective standards for evaluating outputs. This makes it easier to track progress and confirm character consistency, and it sets the stage for meaningful comparisons during optimization.

Stable Diffusion conditions image generation on the prompt through text embeddings injected via cross-attention. This is what lets the prompt control features, refine style and support consistency. To optimize character output, you adjust generation settings such as the seed, sampler, number of sampling steps and CFG (guidance) scale. These settings control how closely and how creatively the model follows the prompt. Generating several variants and evaluating them against your references shows which settings work best.
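A small, systematic grid keeps these experiments comparable: vary the settings across a fixed set of seeds and score each result against your reference. The sketch below enumerates such a grid. The scoring function is a placeholder assumption; a real one might compare face embeddings or rely on manual rating. The toy scorer exists only to make the example runnable.

```python
from itertools import product


def experiment_grid(seeds, cfg_scales, step_counts):
    """Every combination of seed, CFG scale and step count to try once."""
    return [
        {"seed": seed, "cfg_scale": cfg, "steps": steps}
        for seed, cfg, steps in product(seeds, cfg_scales, step_counts)
    ]


def best_settings(grid, score):
    """Return the settings with the highest score (the first one wins ties).

    `score` is supplied by the caller; in practice it would generate an image
    with those settings and rate its similarity to the reference character.
    """
    return max(grid, key=score)


def toy_score(settings):
    # Illustration only: pretend CFG 7 and more steps look most consistent.
    return -abs(settings["cfg_scale"] - 7.0) + settings["steps"] / 100


grid = experiment_grid(seeds=[1, 2, 3], cfg_scales=[5.0, 7.0, 9.0], step_counts=[20, 30])
print(len(grid))  # 18 runs
print(best_settings(grid, toy_score))
```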

6. Use textual inversion

Textual inversion in Stable Diffusion teaches the model a new visual concept or character style. It trains a new token embedding on a small set of curated images while the model itself stays frozen. The resulting embeddings are small files that are commonly shared on platforms such as Civitai. You maintain a consistent character by referencing the embedding’s trigger word in your prompts. Negative embeddings placed in the negative prompt help minimize visual artifacts such as blurry images, distorted limbs and duplicated features.
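Textual inversion keeps every model weight frozen and optimizes only the vector for one new pseudo-word (for example, a placeholder token such as `<my-character>`; the name is hypothetical). The toy below shows that idea in plain Python. A fixed linear map stands in for the frozen network, and a squared-error loss stands in for the diffusion denoising loss. It therefore illustrates the optimization pattern rather than real training.

```python
def train_embedding(frozen_weights, target, steps=200, lr=0.1):
    """Toy textual inversion: optimise only the new token's embedding.

    The frozen "model" is a linear map y = W @ e, a stand-in (assumption) for
    the frozen text encoder and U-Net. Gradient descent minimises
    ||W @ e - target||^2 with respect to e; W is read but never updated.
    """
    rows, dim = len(frozen_weights), len(frozen_weights[0])
    e = [0.0] * dim  # the new embedding, initialised at zero
    for _ in range(steps):
        y = [sum(w * x for w, x in zip(row, e)) for row in frozen_weights]
        residual = [yi - ti for yi, ti in zip(y, target)]
        # d/de ||W e - t||^2 = 2 * W^T (W e - t)
        grad = [
            2.0 * sum(frozen_weights[i][j] * residual[i] for i in range(rows))
            for j in range(dim)
        ]
        e = [ej - lr * gj for ej, gj in zip(e, grad)]
    return e


W = [[1.0, 0.0], [0.0, 2.0]]  # frozen weights
target = [1.0, 2.0]           # features the reference images "ask for"
embedding = train_embedding(W, target)
print([round(v, 4) for v in embedding])  # converges towards [1.0, 1.0]
```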

7. Use LoRA

LoRA (Low-Rank Adaptation) in Stable Diffusion is an efficient fine-tuning technique. It adds small trainable low-rank matrices to selected layers of a pretrained network, which allows the model to learn new visual concepts quickly. The base model’s weights stay frozen and only the low-rank updates are trained, which minimizes resource use. As a result, LoRA files stay small while retaining strong adaptation capabilities, which makes LoRA useful for generating many variations of the same character. A character LoRA is typically trained on a curated dataset of 30–40 varied images of the character, followed by testing and iteration to ensure consistent character generation in Stable Diffusion.
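The low-rank idea can be sketched with plain nested lists. Instead of updating a d×k weight matrix W, LoRA trains two small factors, B (d×r) and A (r×k), and uses W + (α/r)·BA, following the scaling described in the original LoRA paper. The 768×768 layer size and rank 8 below are illustrative assumptions, chosen to show why LoRA files stay small.

```python
def matmul(a, b):
    """Multiply two matrices given as nested lists (a: n x m, b: m x p)."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def lora_merge(W, A, B, alpha, rank):
    """Return W + (alpha / rank) * B @ A.

    W: d x k frozen base weight; B: d x r and A: r x k are the trained
    low-rank factors. Only A and B need to be stored in the LoRA file.
    """
    delta = matmul(B, A)
    scale = alpha / rank
    return [
        [w + scale * dw for w, dw in zip(w_row, d_row)]
        for w_row, d_row in zip(W, delta)
    ]


def parameter_counts(d, k, rank):
    """Trainable parameters: full fine-tuning of one layer vs. a LoRA of that rank."""
    return d * k, rank * (d + k)


print(parameter_counts(768, 768, 8))  # (589824, 12288)
print(lora_merge([[1.0, 0.0], [0.0, 1.0]], A=[[0.0, 2.0]], B=[[1.0], [0.0]], alpha=1.0, rank=1))
```

For this hypothetical layer, the rank-8 update needs about 2% of the parameters that full fine-tuning would touch. This is the reason a character LoRA is a small file.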

8. Use DreamBooth

DreamBooth is an advanced consistency technique for Stable Diffusion. It creates consistent characters by fine-tuning the model checkpoint itself on a set of high-quality reference images. It binds the subject’s unique visual features to a rare identifier token, which lets the model reproduce the character’s appearance across different prompts. DreamBooth’s main advantage is how well it preserves identity details, which makes it ideal for generating consistent characters. The trade-off is heavier training and larger output files than LoRA or textual inversion.
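As described in its original paper, DreamBooth pairs the subject with a rare identifier token. It also adds a class-specific prior-preservation loss so the model does not forget what the general class looks like. The function below is a simplified sketch of that combined objective on plain lists. The argument names are hypothetical, and a real trainer computes these terms on the U-Net’s noise predictions.

```python
def mse(pred, target):
    """Mean squared error between two equal-length vectors."""
    if len(pred) != len(target) or not pred:
        raise ValueError("pred and target must be non-empty and equal length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def dreambooth_loss(instance_pred, instance_noise, prior_pred, prior_noise, prior_loss_weight=1.0):
    """Instance loss on the character images plus a weighted class-prior loss.

    instance_*: noise predicted for / added to the character's reference images
    (prompt like "a photo of sks person"); prior_*: the same for images the
    original model generated from the plain class prompt ("a photo of a person").
    """
    return mse(instance_pred, instance_noise) + prior_loss_weight * mse(prior_pred, prior_noise)


print(dreambooth_loss([1.0, 2.0], [1.0, 0.0], [0.0, 0.0], [1.0, 1.0], prior_loss_weight=0.5))  # 2.5
```

Raising the prior weight pulls the model back toward its general idea of the class. Lowering it lets the model fit the character more tightly, at a higher risk of overfitting.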

What are the challenges of creating a consistent character in Stable Diffusion?

The challenges of creating a consistent character in Stable Diffusion stem from facial and body inconsistencies, style and pose variations, background randomness, model unpredictability and training data biases. All of these disrupt accurate character replication across images.

The challenges of creating a consistent character in Stable Diffusion are given below.

- Facial and body consistency: Stable Diffusion introduces subtle changes to faces and body shapes between generations, which makes it hard to preserve a character’s unique appearance across images.

- Clothing and style: Stable Diffusion struggles to keep clothing details such as fabric texture and patterns consistent, which makes the character’s overall style hard to maintain.

- Environmental variations: Stable Diffusion generates different backgrounds and lighting for each prompt, which disrupts character consistency.

- Posing variations: Stable Diffusion frequently alters poses and gestures, which leads to inconsistent character anatomy and makes specific actions hard to replicate reliably.

- Model variability: Stable Diffusion starts from random noise, so every new seed produces a different interpretation of the same prompt. Even with an identical prompt and seed, small differences can appear when the sampler, settings, hardware or software version changes.

- Model limitations: Biases in Stable Diffusion’s training data can alter or misrepresent character details beyond the user’s control.

- Complex prompts: Stable Diffusion often fails to recreate character traits accurately when a prompt combines many visual features and style elements.

What are the common issues with Stable Diffusion?

The common issues with Stable Diffusion are given below.

- Bias and discrimination: Stable Diffusion can produce biased outputs because of biased training data, which leads to unfair or discriminatory results.

- Complex prompt handling and inconsistencies: Stable Diffusion struggles with nuanced or ambiguous prompts and produces images that do not align with the user’s intent.

- Resource-intensive: Stable Diffusion needs substantial computational resources, especially GPU memory, for both training and inference, which restricts accessibility.

- Fingers and other anatomical issues: Stable Diffusion often generates visible anatomical flaws such as distorted fingers or unrealistic body parts, which reduce overall quality.

- Model loading and dependency issues: Running Stable Diffusion often involves dependency conflicts or model loading errors that require workarounds and complicate deployment and usage.

- Misuse and ethical concerns: Stable Diffusion is vulnerable to misuse for generating harmful or unethical images, raising substantial ethical and legal concerns in the AI community.

What is the character limit for Stable Diffusion?

Stable Diffusion’s prompt limit is 75 tokens. This corresponds roughly to 350 to 380 characters of English text, although the exact count depends on the words used. The cap comes from the CLIP text encoder, which processes 77 tokens at a time: 75 prompt tokens plus a start token and an end token. Some interfaces extend this limit by splitting longer prompts into multiple 75-token chunks.
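The 75-token cap follows from CLIP’s 77-token context window. Interfaces that extend the limit, such as the AUTOMATIC1111 WebUI, work roughly as follows. They split the tokenized prompt into 75-token chunks and wrap each chunk with start and end tokens. Each chunk is encoded separately, and the embeddings are concatenated. The sketch below shows only the chunking step. The token strings are placeholders, since real tokens come from CLIP’s BPE tokenizer, and the padding choice is an assumption.

```python
CONTEXT_LENGTH = 77                  # CLIP text encoder window
CONTENT_TOKENS = CONTEXT_LENGTH - 2  # 75 prompt tokens + start + end token
START, END = "<start>", "<end>"      # placeholders for CLIP's special tokens


def chunk_prompt_tokens(tokens):
    """Split a tokenized prompt into 77-token windows of up to 75 content tokens.

    Short chunks are padded with the end token (padding conventions differ
    between implementations; this is an assumption).
    """
    chunks = []
    for offset in range(0, max(len(tokens), 1), CONTENT_TOKENS):
        body = list(tokens[offset:offset + CONTENT_TOKENS])
        padding = [END] * (CONTENT_TOKENS - len(body))
        chunks.append([START, *body, END, *padding])
    return chunks


# A hypothetical 100-token prompt needs two chunks; each is encoded separately
# and the resulting embeddings are concatenated.
prompt_tokens = [f"tok{i}" for i in range(100)]
chunks = chunk_prompt_tokens(prompt_tokens)
print(len(chunks), [len(c) for c in chunks])  # 2 [77, 77]
```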

Can Vosu.ai generate consistent characters?

Yes, Vosu.ai can generate consistent characters because it supports uploading character reference images and preserves appearance throughout different video scenes. Vosu.ai uses its multi-model approach to keep key features like facial details, clothing and style visually coherent, which makes it suitable for projects that require recognizable characters or virtual influencers.

Can MidJourney create consistent characters?

Yes, MidJourney can create consistent characters because it provides tools to carry character traits across images. You host a reference image online and pass its URL with the character reference parameter (--cref). You then tune the character weight parameter (--cw, from 0 to 100) by experimenting with prompts. High values copy the face, hair and clothing, while low values focus mainly on the face. MidJourney helps you achieve visual narrative consistency by portraying the same character with similar features in different scenarios.
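The parameters combine into a plain prompt string. The helper below is a hypothetical sketch that assembles one and checks the --cw range. It assumes the V6-era `--cref`/`--cw` syntax; newer MidJourney versions may use different reference parameters, so check the current documentation. The image URL is a placeholder.

```python
def character_reference_prompt(description: str, cref_url: str, cw: int = 100) -> str:
    """Hypothetical helper: append MidJourney's --cref and --cw parameters.

    Assumes the V6-era syntax where --cw ranges from 0 (face only)
    to 100 (face, hair and clothing).
    """
    if not 0 <= cw <= 100:
        raise ValueError("--cw must be between 0 and 100")
    return f"{description} --cref {cref_url} --cw {cw}"


# Low character weight: keep the face, let the outfit change.
print(character_reference_prompt(
    "the same explorer in a winter coat, snowy mountain pass",
    "https://example.com/explorer.png",  # placeholder URL for a hosted reference image
    cw=20,
))
```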

Can you generate anything with Stable Diffusion?

No, you cannot generate just anything with Stable Diffusion, because its official releases apply ethical and technical constraints to prevent misuse. Stable Diffusion is open source, and it lets you create realistic images and edit existing images with text prompts. However, built-in safeguards such as the default safety checker block certain content, like explicit material, and the model license prohibits certain uses, which limits full creative freedom.

Are Stable Diffusion models censored?

Yes, Stable Diffusion models are censored by default, because the official pipelines include a safety filter that blocks explicit content to comply with ethical and legal guidelines. Because the models are open, advanced users can bypass or disable these filters, but the default safeguards keep generated outputs within acceptable boundaries.

Are there alternatives to Stable Diffusion?

Yes, there are alternatives to Stable Diffusion, such as Headshotly.ai, MidJourney and DALL·E. If your goal is consistent characters, Headshotly.ai specializes in turning selfies into professional-grade, consistent AI models, while MidJourney and DALL·E focus more on creative scene generation. Other Stable Diffusion competitors include free AI image generators and professional AI tools with unique features, such as Adobe Firefly’s Creative Cloud integration. These options vary in pricing and usage limits, which helps users choose the tool that best fits their creative needs.