Visual effects are an essential part of modern film storytelling, allowing creators to portray scenes that go beyond the limits of live action filming. They are used to improve, manipulate or generate imagery that is impossible, unsafe or too costly to capture on set. These techniques blend real world footage with digital elements to create immersive cinematic experiences. There are several types of visual effects, such as computer generated imagery (CGI), compositing, motion capture, matte painting and simulation effects. Each type serves a distinct purpose, from creating digital creatures and environments to simulating realistic fire, smoke or fabric movement.

VFX is widely used in film, television, gaming, animation, advertising, architecture, automotive design and medicine. These industries use visual effects to improve realism and communicate complex ideas. Because of this broad application, the demand for skilled professionals and specialized tools continues to grow. Some of the leading software for visual effects includes Nuke, Adobe After Effects, Fusion, Maya, Blender, Houdini and ZBrush. These programs offer powerful tools for 3D modeling, compositing, animation and simulation. Generative AI has also entered the VFX workflow, with platforms like Vosu.ai allowing fast creation of AI generated visuals that support traditional pipelines. The future of VFX is being shaped by artificial intelligence, real time rendering and virtual production. These innovations streamline workflows, lower production costs and improve realism, which makes visual effects more accessible across creative industries.

What is VFX?

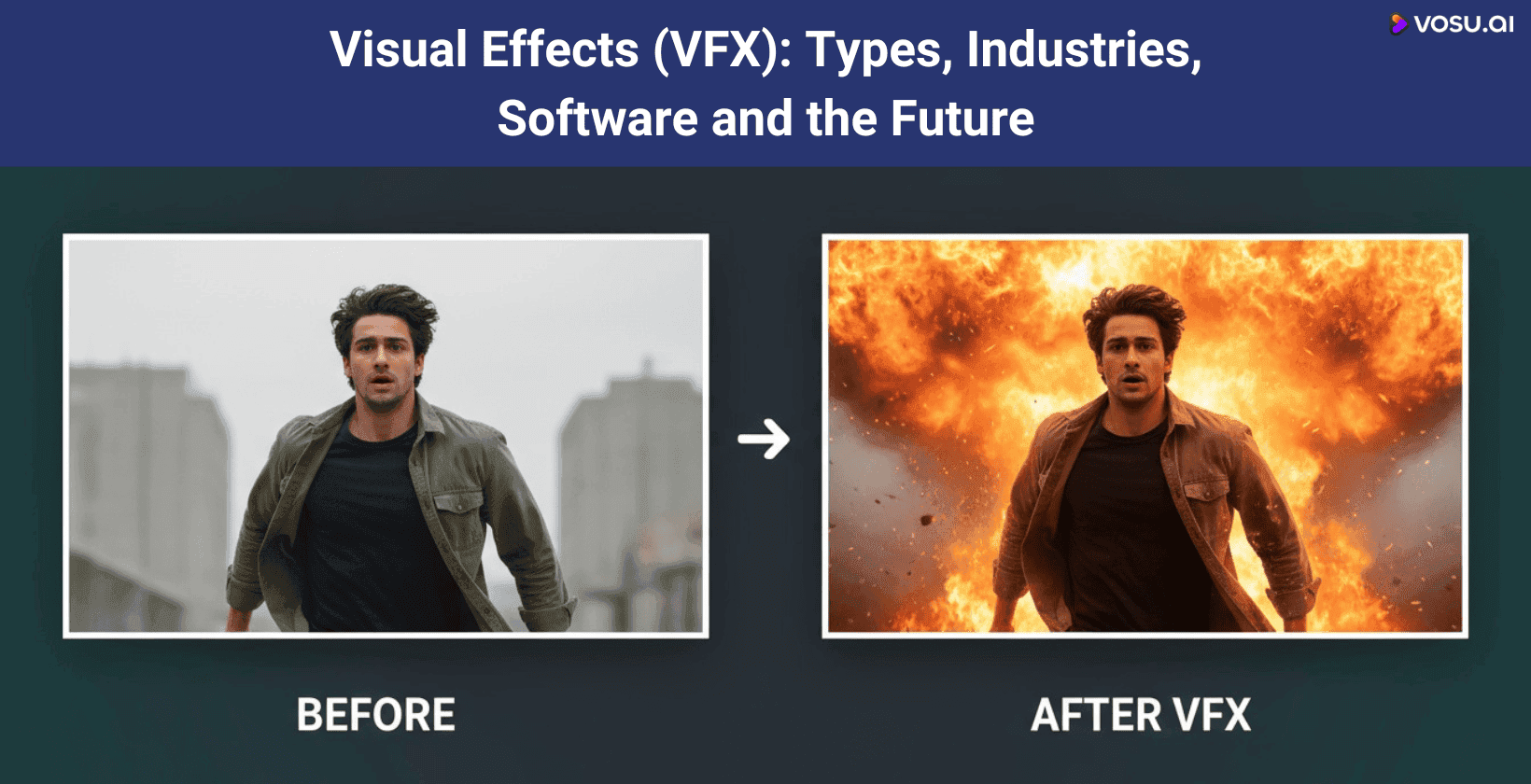

VFX, or visual effects, refers to the techniques used to create, manipulate or improve imagery for film and other moving media when practical filming is not possible. This involves combining live action footage with digitally generated elements, such as computer generated imagery (CGI), to produce visuals that are impossible, dangerous or too expensive to capture in real life.

Visual effects (VFX) originated in the 19th century with early photographic tricks and evolved through practical effects in silent films, such as Georges Méliès' use of stop tricks and multiple exposures. VFX techniques advanced over time to include matte paintings, stop-motion animation and compositing methods. The introduction of computer-generated imagery (CGI) in the late 20th century revolutionized VFX by enabling the creation of complex digital effects and virtual worlds.

Visual effects in film have become essential to modern storytelling, allowing directors to bring imaginative concepts and ambitious visions to life. By blending digital technologies with traditional cinematic techniques, VFX artists construct environments, characters and scenarios that go far beyond the limitations of physical sets. VFX in film expands the creative possibilities of the medium, whether by adding realistic monsters to an action scene, transforming everyday locations into magical landscapes or staging large scale destruction.

Visual effects in movies also include more subtle elements, such as extending backgrounds, adjusting lighting or adding atmospheric detail, which remain invisible to the viewer. These seamless improvements help make the extraordinary feel authentic and draw audiences deeper into the story. Today, VFX are not just tools for spectacle. They are a vital part of the filmmaking process, shaping immersive and emotionally resonant cinematic experiences.

What are the types of VFX?

The types of VFX are computer generated imagery (CGI), compositing, motion capture, matte painting and simulation effects.

The five types of VFX are outlined below.

1. Computer generated imagery (CGI)

2. Compositing

3. Motion capture

4. Matte painting

5. Simulation effects

1. Computer generated imagery (CGI)

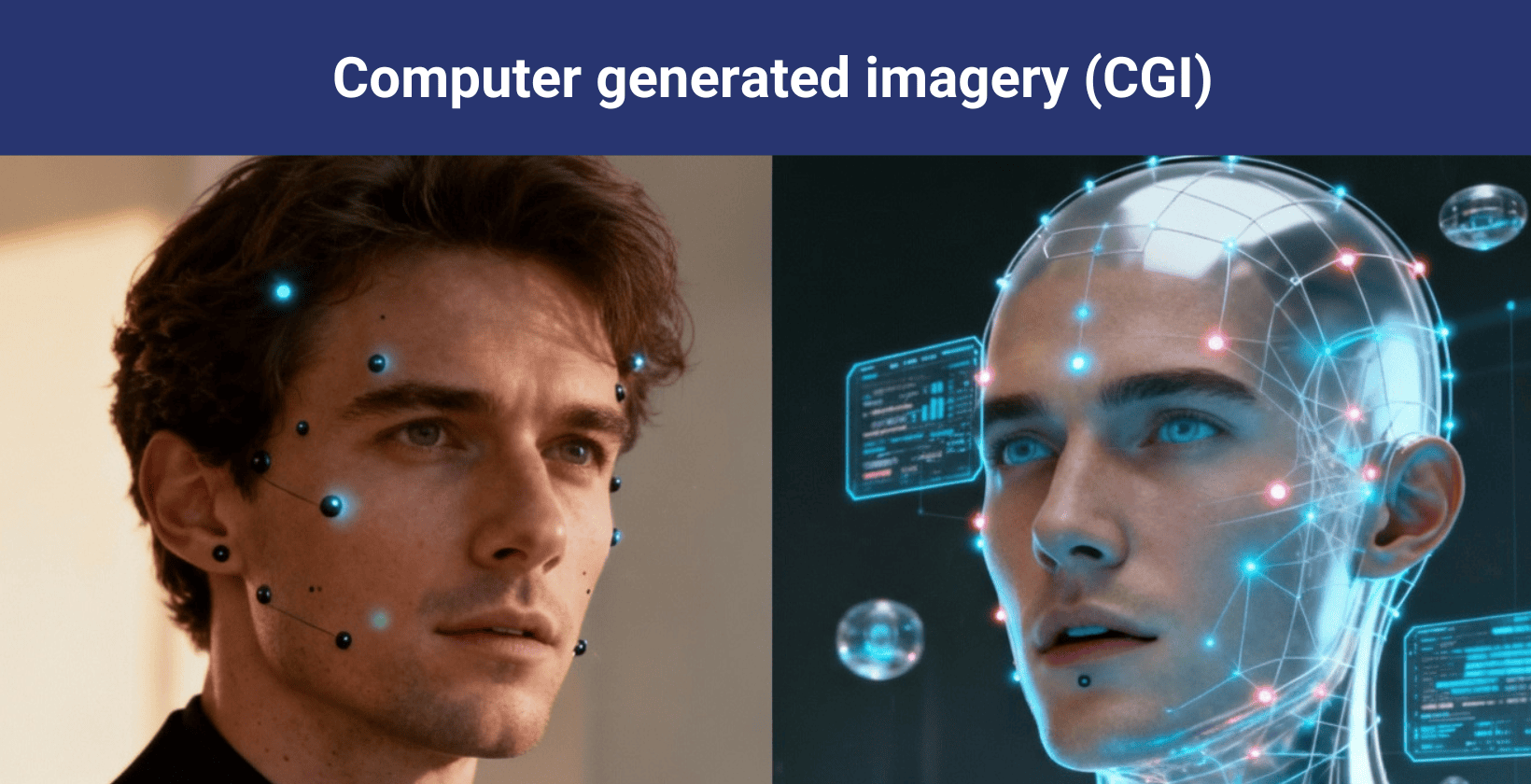

Computer generated imagery (CGI) refers to the creation of images, both static and moving, using computer software. These images allow for visuals that are not easily produced by traditional methods. As the backbone of modern visual effects, CGI is widely used in movies, television and video games to generate everything from fantastical creatures and imaginative worlds to photorealistic environments and subtle improvements.

The CGI process begins with 3D modeling, where digital representations of characters or objects are crafted. These models are then textured to add color and surface detail, equipped with digital skeletons for movement and animated using keyframes or performance capture to create lifelike motion. Lighting and rendering simulate how virtual light interacts with surfaces which produces realistic shading and reflections before all data is processed into final images or frames.
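The keyframe step mentioned above can be illustrated with a minimal sketch. It assumes simple linear interpolation between keyframes; animation packages typically offer richer curve types (such as Bezier easing), and the function name `sample` and the example keys are hypothetical, chosen only for illustration.

```python
from bisect import bisect_right


def sample(keyframes, frame):
    """Return the animated value at `frame`.

    `keyframes` is a list of (frame_number, value) pairs sorted by frame.
    Values between two keys are linearly interpolated; frames outside
    the keyed range hold the first or last value.
    """
    frames = [f for f, _ in keyframes]
    if frame <= frames[0]:
        return keyframes[0][1]
    if frame >= frames[-1]:
        return keyframes[-1][1]
    i = bisect_right(frames, frame)          # index of the next key after `frame`
    (f0, v0), (f1, v1) = keyframes[i - 1], keyframes[i]
    t = (frame - f0) / (f1 - f0)             # 0.0 at the previous key, 1.0 at the next
    return v0 + t * (v1 - v0)


# Hypothetical example: an object rises 5 units over 10 frames, then holds.
height_keys = [(0, 0.0), (10, 5.0), (20, 5.0)]
halfway = sample(height_keys, 5)   # 2.5
```

The animator only sets the three keys; every in-between frame is computed, which is why keyframing is far less laborious than posing each frame by hand.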

CGI’s versatility within visual effects is unmatched. It brings epic monsters to life in blockbuster films, constructs immersive digital landscapes in video games, improves dramatic scenes in television and delivers striking images for advertisements. Whether used for subtle background additions or entire animated worlds, CGI enables creators to turn imagination into reality, which makes it a fundamental tool in visual storytelling across all forms of digital media.

2. Compositing

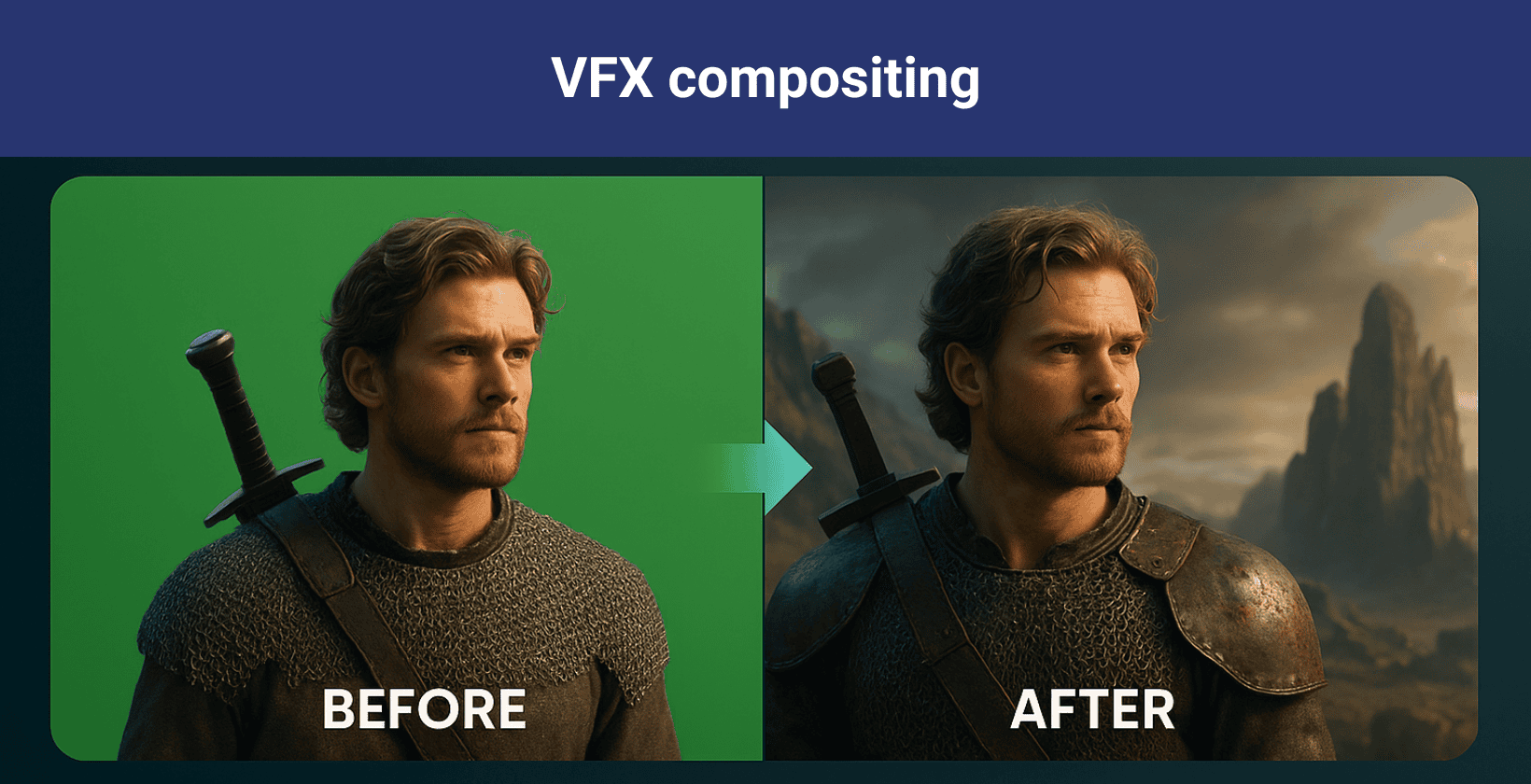

Compositing is the process of combining multiple visual elements from different origins, such as live action footage, digitally created images, matte paintings and other assets, into a single, seamless image so that they appear to belong naturally to the same scene. This process is essential in VFX because it allows filmmakers to create visuals that are impossible or impractical to capture directly on set. Artists carefully match lighting, color and perspective to ensure the added elements blend naturally with the original live action footage.

Key techniques of compositing include chroma keying, rotoscoping, matte painting integration and digital cleanup. Chroma keying replaces green or blue screens with digital backgrounds. Rotoscoping is used to isolate or remove subjects frame by frame. Matte painting inserts a detailed, imaginary environment, and digital cleanup removes unwanted objects like wires or rigs. Modern compositing relies on advanced software such as Nuke, After Effects and Fusion, along with emerging AI powered tools like Vosu.ai that improve precision and speed in tasks such as cleanup and background replacement. These technologies allow artists to achieve photorealistic visuals and seamlessly blend real and digital elements to improve the overall storytelling.
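To make chroma keying more concrete, here is a deliberately simplified sketch. It assumes pixels are RGB tuples with channels in the range 0 to 1, derives a matte from each pixel's distance to a pure green key color, and blends foreground over background with the standard "over" operation. The function names and the `tolerance` value are illustrative assumptions; production keyers in tools like Nuke or Fusion handle spill, soft edges and noise far more carefully.

```python
def key_alpha(pixel, key_color=(0.0, 1.0, 0.0), tolerance=0.4):
    """Return opacity in [0, 1]: 0 where the pixel matches the key color,
    rising to 1 once its RGB distance from the key exceeds `tolerance`."""
    distance = sum((p - k) ** 2 for p, k in zip(pixel, key_color)) ** 0.5
    return min(1.0, distance / tolerance)


def over(foreground, alpha, background):
    """Blend one foreground pixel over one background pixel."""
    return tuple(alpha * f + (1.0 - alpha) * b
                 for f, b in zip(foreground, background))


def composite(plate, backdrop):
    """Key the green out of `plate` and lay it over `backdrop`.
    Both images are lists of rows of RGB tuples with equal dimensions."""
    return [
        [over(fg, key_alpha(fg), bg) for fg, bg in zip(plate_row, backdrop_row)]
        for plate_row, backdrop_row in zip(plate, backdrop)
    ]


# Hypothetical 1x2 image: a green-screen pixel next to a red costume pixel.
plate = [[(0.0, 1.0, 0.0), (1.0, 0.0, 0.0)]]
backdrop = [[(0.2, 0.3, 0.8), (0.2, 0.3, 0.8)]]
result = composite(plate, backdrop)
# The green pixel is replaced by the backdrop; the red pixel is kept.
```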

3. Motion capture

Motion capture, or mocap, is a technique used in visual effects (VFX) to digitally record the movements of actors or objects and apply that data to computer generated characters or digital elements. Actors wear suits fitted with sensors or reflective markers, while multiple cameras or tracking systems capture their exact movements from different angles. This data is processed using specialized software, which allows CGI characters to mimic the actor’s physical actions and facial expressions with high precision.

Motion capture improves realism by allowing digital characters to move and emote like real people, which improves the integration between live action footage and CGI. It is also an efficient solution that reduces the need for frame by frame animation and ensures consistent motion across scenes. By bridging real world performance and digital environments, mocap makes the final visuals more believable. It also contributes to cost effective production in large scale digital sequences, helping filmmakers manage complex shots while maintaining visual quality.

4. Matte painting

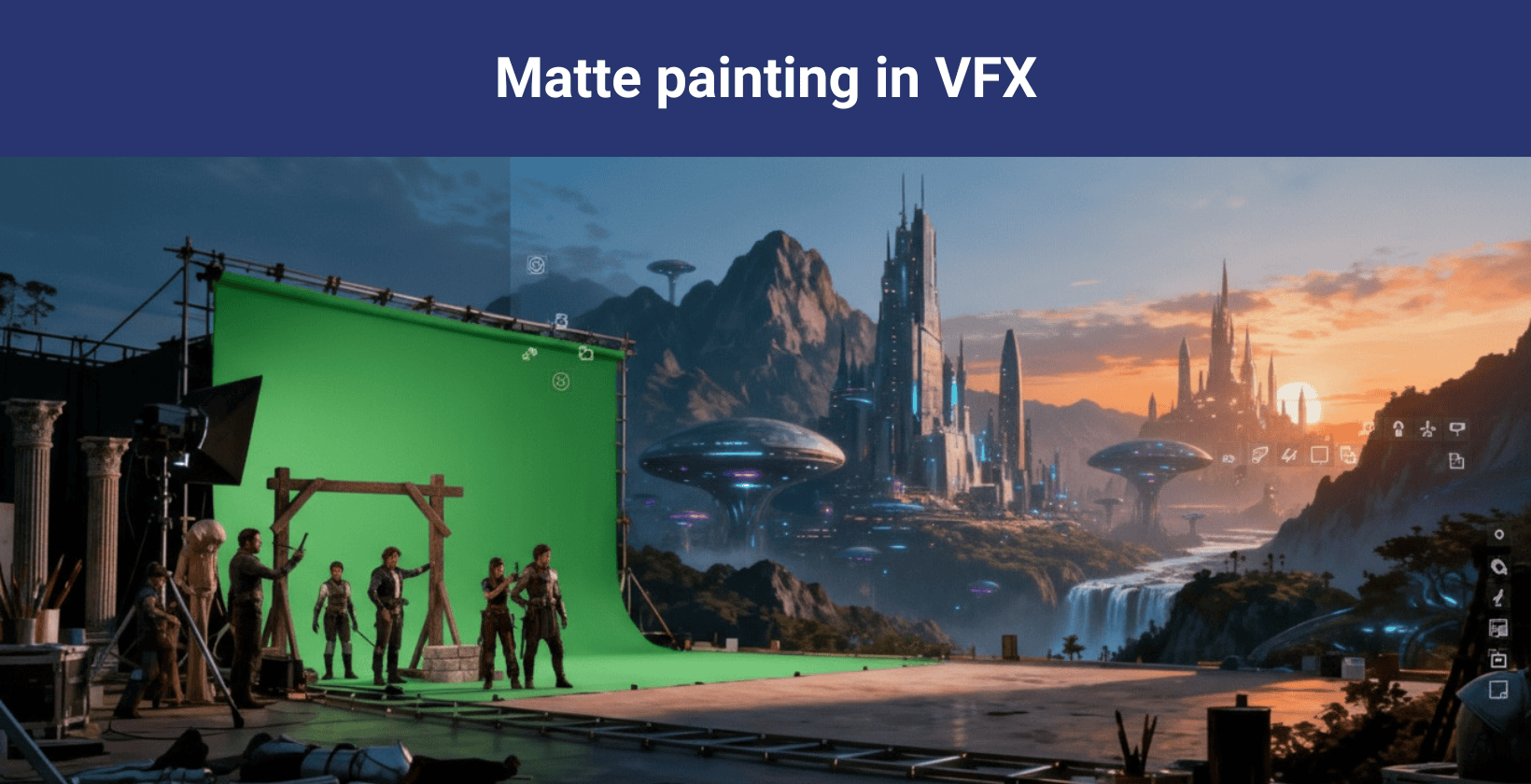

Matte painting is a visual effects (VFX) technique used to create painted or digitally created backgrounds that extend or replace real environments in a scene. It is used to create environments that are too vast, complex or fantastical to build or film, such as ancient cities, alien worlds or massive landscapes. The core purpose of matte painting is to support cinematic storytelling by providing detailed settings that improve the illusion of depth and realism and immerse viewers in a believable on-screen world. Matte paintings were traditionally created by hand on glass or canvas, but modern productions now use digital matte painting (DMP), which involves designing high resolution, photorealistic images using specialized software. The process begins with conceptual sketches, followed by painting, digital texturing and the application of lighting and perspective adjustments.

Digital mattes can be animated to simulate environmental movement. Integration with live action footage is achieved through compositing, where the matte is seamlessly layered behind or around filmed elements. Artists use color grading, digital lighting and parallax effects to make sure the matte painting aligns with real world elements, creating a cohesive visual blend. This fusion of the physical and the digital makes matte painting an essential part of VFX pipelines, allowing filmmakers to construct expansive, cinematic worlds with precision and creativity.
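The parallax effect mentioned above can be sketched with a small calculation. It assumes an idealized pinhole camera that moves sideways, with matte layers treated as flat cards at fixed distances; the function name and the focal length of 1000 pixels are hypothetical values chosen for illustration.

```python
def parallax_shift(camera_move, depth, focal_length_px=1000.0):
    """Horizontal on-screen shift, in pixels, of a flat layer at `depth`
    when the camera translates sideways by `camera_move` (same units as depth).

    Under a pinhole model the shift is inversely proportional to depth,
    and the image moves opposite to the camera, hence the minus sign.
    """
    return -focal_length_px * camera_move / depth


# Hypothetical two-layer matte: a nearby ruin and distant mountains.
near_shift = parallax_shift(camera_move=0.5, depth=10.0)     # -50.0 pixels
far_shift = parallax_shift(camera_move=0.5, depth=1000.0)    # -0.5 pixels
```

Because the near layer slides a hundred times further than the far one, splitting a flat painting into depth layers is enough to make it read as three dimensional during a camera move.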

5. Simulation effects

Simulation effects are computer generated effects used in visual effects (VFX) to recreate complex natural phenomena and dynamic interactions that are too difficult or impossible to film in real life. These effects rely on physics based algorithms and digital models to ensure realism and precision. Simulation effects cover core types such as dynamic simulations, particle simulations, rigid body dynamics, soft body simulations, fluid simulations and cloth simulations.

Dynamic simulations capture movement and interactions over time. Particle simulations imitate elements like dust, rain or sparks. Rigid body dynamics simulate the behavior of solid objects breaking or colliding, such as collapsing buildings. Soft body simulations handle deformable materials like flesh or jelly. Fluid simulations produce realistic water, fire and smoke, and cloth simulations produce lifelike fabric movement.

Simulation effects are used to add realism, control and spectacle to scenes by accurately mimicking unpredictable physical behavior while offering creative flexibility. Examples of simulation effects include crashing ocean waves, explosive destruction, flowing hair and fur on digital characters, swirling smoke, burning fires and fluttering clothing, all contributing to immersive and visually rich film sequences.
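As a rough illustration of the physics based approach behind particle simulations, the sketch below advances a set of particles under gravity and bounces them off a ground plane. It assumes semi-implicit Euler integration in two dimensions; the function name, the `restitution` value and the starting state are illustrative assumptions, and tools such as Houdini use far more sophisticated solvers.

```python
def step(particles, dt, gravity=-9.81, restitution=0.5):
    """Advance particles by one time step of `dt` seconds.

    Each particle is a tuple (x, y, vx, vy). Velocity is updated first and
    the new velocity is then used to move the particle (semi-implicit Euler).
    Particles that fall below y = 0 are placed on the ground and bounce
    back with a fraction `restitution` of their vertical speed.
    """
    advanced = []
    for x, y, vx, vy in particles:
        vy += gravity * dt
        x += vx * dt
        y += vy * dt
        if y < 0.0:
            y = 0.0
            vy = -vy * restitution
        advanced.append((x, y, vx, vy))
    return advanced


# Hypothetical spark: starts 10 units up, drifting sideways at 1 unit per second.
sparks = [(0.0, 10.0, 1.0, 0.0)]
for _ in range(48):                 # two seconds at 24 frames per second
    sparks = step(sparks, dt=1.0 / 24.0)
```

Running one such step per frame for thousands of particles, each with slightly different starting velocities, is the basic recipe behind effects like sparks, dust and rain.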

Which industries use VFX?

The industries that use VFX include film and television, gaming, animation, advertising, virtual and augmented reality, metaverse content creation, education and training, medicine, architecture and automotive design.

The industries that use VFX are outlined below.

- Film and television: VFX brings to life spectacular scenes, creatures, environments and effects that are impractical or impossible to capture on camera which improves storytelling, world building and visual immersion for audiences.

- Gaming: VFX creates dynamic gameplay elements, such as explosions, weather effects, energy blasts and character powers which elevate visual realism, interactivity and player engagement through immersive and responsive virtual worlds.

- Animation: VFX assists in producing complex animated effects, from natural phenomena to fantastical magic which enrich visual narratives and allow creativity beyond traditional hand drawn or computer generated sequences.

- Advertising: VFX transforms commercials with eye-catching visuals, product demonstrations and digital improvements which helps brands stand out, communicate messages clearly and invent imaginative, memorable campaigns.

- Virtual and augmented reality (VR/AR): VFX is used extensively in the VR/AR industry to create immersive, realistic and interactive digital environments, using techniques like motion capture, CGI (computer-generated imagery), dynamic lighting and photorealistic rendering. These techniques build believable virtual worlds that users can explore and interact with.

- Metaverse content creation: VFX is widely used in metaverse content creation to build immersive virtual worlds, realistic avatars and interactive environments. These effects enhance virtual and augmented reality experiences through photorealistic textures, animations and environmental effects that improve user interaction and storytelling.

- Education/training simulations: Visual effects (VFX) are used in the education and training simulations industry to create engaging and interactive learning experiences by simulating realistic environments and scenarios that are difficult, expensive, or unsafe to reproduce in real life.

- Medicine: VFX is used for detailed medical visualizations, surgical simulations and educational animations which facilitate training, patient education and remote learning by illustrating complex biological processes in accessible ways.

What are the best VFX software tools?

The best VFX software tools are Nuke, Adobe After Effects, Fusion, Maya, Blender, Houdini, ZBrush, Unreal Engine, Cinema 4D, Substance Painter and Vosu.ai.

The best VFX software tools are outlined below.

- Nuke: Nuke is a leading node based VFX compositing tool, designed for high-end VFX in film and TV which provides advanced compositing, 3D integration and collaborative VFX workflows for major studios.

- Adobe After Effects: Adobe After Effects is widely used for VFX work, allowing artists to create motion graphics, animation and dynamic VFX improvements. This makes it an essential tool across varied VFX and post production environments.

- Fusion: Fusion is a professional VFX compositor with a node based interface, specialized for creating complex VFX, integrating 3D VFX and delivering visual effects for film, TV and VR content.

- Maya: Maya is recognized as a standard VFX software, extensively used for creating 3D VFX such as character animation, visual effects simulations and high quality assets in VFX heavy productions.

- Blender: Blender is an open source VFX software suite that supports end-to-end VFX creation, including 3D modeling, animation and visual effects compositing, for indie artists and professionals alike.

- Houdini: Houdini is known for advanced procedural VFX, and is a staple in VFX production pipelines. It excels at simulations, complex VFX for destruction, fluids and dynamic effects in blockbuster VFX projects.

- ZBrush: ZBrush is vital for sculpting high detail assets in VFX, supporting the modeling of characters and creatures that are integrated into VFX scenes for movies, TV and games.

- Unreal Engine: Unreal Engine excels in delivering cutting-edge real-time VFX with revolutionary rendering, lighting, particle and animation systems designed to empower creators in games, film and virtual production in 2025.

- Cinema 4D: Cinema 4D excels in combining user-friendly workflows with professional-grade features for 3D modeling, animation, simulations and rendering, which makes it a top VFX software tool for creators in 2025.

- Substance Painter: Substance Painter remains essential for 3D texture painting in VFX, with 2025 updates focused on precision tools, workflow automation and broad system compatibility that makes it highly effective for detailed material creation in VFX projects.

- Vosu.ai: Vosu.ai is an AI-powered generative VFX platform specializing in character animation and video generation. It delivers Hollywood quality full body character replacements with advanced lip-sync, consistent lighting and realistic body dynamics for production ready visual effects.

What VFX software is used in Hollywood?

Hollywood’s go-to VFX software includes Autodesk Maya (3D modeling, animation), Houdini (procedural effects, simulations), Nuke (node based compositing), Adobe After Effects (motion graphics, compositing) and Autodesk 3ds Max (modeling, rendering). These tools offer advanced capabilities for 3D animation, realistic simulations, complex compositing and seamless integration into production pipelines, which together support blockbuster quality visual effects.

What VFX software does Marvel use?

Marvel uses industry-standard VFX software including Autodesk Maya (3D modeling and animation), Houdini (procedural and simulation effects), Nuke (compositing), ZBrush (sculpting), and Substance Painter and Mari (texturing). These are complemented by additional software such as Photoshop, Unreal Engine, and 3ds Max, according to interviews with Marvel VFX supervisors and editors like Anedra Edwards. Marvel does not rely on a single software or vendor for its visual effects. It contracts dozens of VFX studios worldwide, such as Industrial Light & Magic (ILM), Weta Digital, Framestore and Digital Domain, each with its own pipeline and specialized tools.

What is the best CGI software?

The best CGI software for beginners is Blender. It stands out because it is free, open source and offers a vast array of professional grade tools, including modeling, sculpting, animation and rendering. Blender’s intuitive interface and strong global community make learning accessible, while regular updates and extensive tutorials support users at every skill level and for nearly any CGI task.

What is a visual effects artist?

A visual effects (VFX) artist is a professional who creates digital imagery and special effects for movies, television, video games and other media. Their core responsibilities include designing, animating and integrating computer generated elements with live action footage. They enhance scenes with visual effects like explosions, creatures or environments and ensure seamless, realistic results that serve the narrative.

Are VFX and SFX the same?

No, VFX and SFX are not the same. Visual effects (VFX) are created digitally in post production to improve or manipulate imagery, whereas special effects (SFX) are practical effects done physically on set during filming, such as explosions or prosthetics. VFX happens after shooting, whereas SFX is performed live during production. The two are combined in many productions: for example, a practical explosion (SFX) filmed on set can be enhanced or extended with digital fire or smoke effects (VFX) in post-production to achieve a more dramatic and controlled result.

What is the difference between animation and VFX?

The core difference is that animation creates moving images and sequences entirely from scratch, while VFX (visual effects) manipulates live action shots by integrating computer generated effects. Animation builds whole worlds, characters and objects digitally, whereas VFX blends digital elements with real footage to achieve realism or impossible effects, commonly used in films to amplify live scenes.

How is AI used in VFX?

AI is revolutionizing the VFX industry by automating repetitive tasks like rotoscoping, masking and motion capture, thus freeing artists for more creative work. AI in VFX encompasses generative creation of environments, smart image enhancement and cleanup, sophisticated facial effects including de-aging and real-time replacement, and smarter pre-production visualization and storyboarding tools. AI powered VFX platforms like Runway Gen-3 Alpha/Gen-4 and Vosu.ai enable creators to take still frames from live-action footage and then use text-to-video or image-to-video AI models to generate various visual effects such as explosions, transformations, new characters or atmospheric elements. These technologies are already deployed in major productions like The Mandalorian and Indiana Jones and the Dial of Destiny, making visual storytelling faster, more flexible, significantly cheaper and creatively more expansive.

Is VFX going to be replaced by AI?

No, VFX is not going to be replaced by AI. While AI is transforming visual effects by automating repetitive processes and accelerating workflows, human VFX artists are still essential for creativity, complex problem solving and artistic decision making. AI serves as a powerful tool that enhances but does not replace the role of skilled VFX professionals in the industry.

What is the future of VFX?

The future of VFX is driven by AI powered tools that automate complex tasks, boost efficiency and unlock new creative possibilities. Generative visual effects (GVFX) stand out, and the biggest trend beyond automation is AI models becoming essential tools within VFX departments. AI is transforming key workflows by enabling quick pre-visualization (pre-viz) with AI-generated shots, generating detailed backgrounds, skies and stylized visual looks, and iterating on concept shots efficiently without long turnaround times.

Virtual production with LED volumes, popularized by productions like The Mandalorian, and real time rendering with Unreal Engine allow immediate visual feedback and faster workflows, while cloud based collaboration platforms like AWS, Google Cloud and Autodesk Flow let global teams work seamlessly. AI-assisted workflows are increasingly integrated to automate routine tasks such as rotoscoping, environment creation and facial animation, and are becoming a standard part of the VFX artist’s toolkit.

Surging demand from streaming platforms, gaming, augmented reality (AR), virtual reality (VR) and advertising content is rapidly expanding the market for VFX. The global visual effects (VFX) market size in 2025 is estimated at around USD 11.19 billion according to Precedence Research, with projections to reach USD 20.29 billion by 2034 at a CAGR of 6.83% from 2025 to 2034.
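The market figures quoted above can be sanity checked with a short calculation. This sketch assumes the growth rate compounds once per year over the nine years from 2025 to 2034; the function names are illustrative, and the only inputs are the numbers cited in the text.

```python
def project(start_value, rate, years):
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1.0 + rate) ** years


def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate that takes start_value to end_value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


years = 2034 - 2025                                   # nine compounding periods
projected_2034 = project(11.19, 0.0683, years)        # in USD billions
implied_rate = implied_cagr(11.19, 20.29, years)      # as a fraction
```

Under that assumption the projection lands at roughly USD 20.3 billion and the implied rate at roughly 6.8%, so the quoted start value, end value and CAGR are consistent with one another.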