TL;DR

Sora AI 2 is OpenAI's next‑gen text‑to‑video, image to video with audio model that turns prompts (and images) into hyper‑real short clips with synchronized audio, improved physics, and stronger safety. It's available via vosu.ai (access Sora 2 and Sora 2 Pro in the Vosu.ai app for Text‑to‑Video and Image‑to‑Video both modes generate audio by default), sora.com and the Sora iOS app; API access is expected next.

What is Sora AI 2?

Sora AI 2 is OpenAI’s latest text‑to‑video and audio‑generating model designed to create lifelike, coherent video clips with motion that respects physical dynamics and sound that matches on‑screen action. Compared to earlier systems, Sora 2 emphasizes temporal consistency (identity/scene stability), greater steerability (follows direction closely), and a broader stylistic range (cinematic, animated, photorealistic, surreal). It’s available via sora.com and a new Sora iOS app (invite‑only at launch in U.S./Canada), with API access signaled for the future.

Why it matters: Sora 2 narrows the gap between ideas and finished cinematics for marketing, education, and filmmaking, while shipping with a layered safety stack (input/output moderation, provenance/watermarking, youth safeguards, and likeness controls).

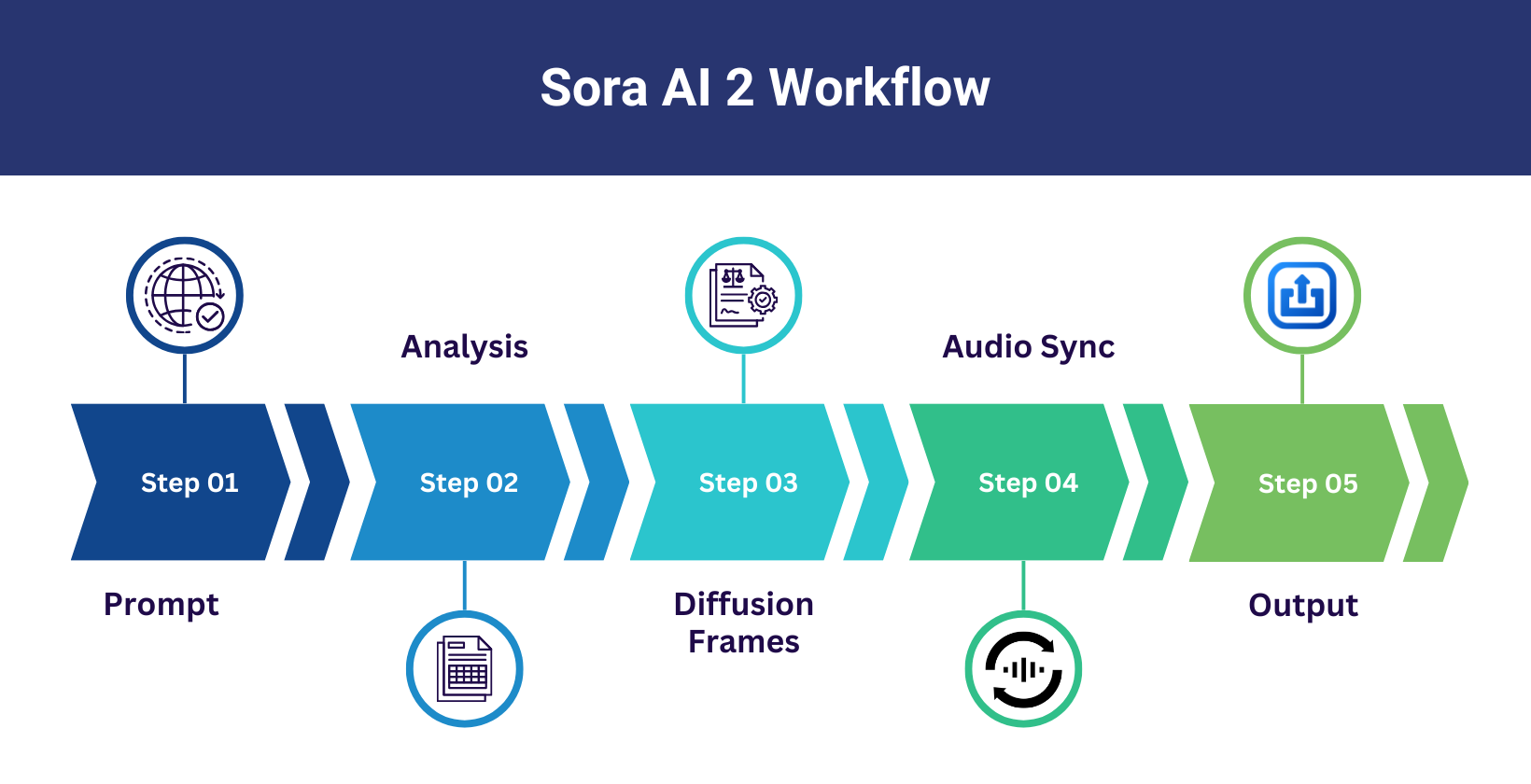

How does Sora AI 2 work?

Sora 2 combines transformers (to interpret prompts and plan) with diffusion (to iteratively denoise frames) and adds temporal reasoning for stability across time, plus audio generation/sync aligned to visuals.

Sora 2 combines transformers (to interpret prompts and plan) with diffusion (to iteratively denoise frames) and adds temporal reasoning for stability across time, plus audio generation/sync aligned to visuals.

5 steps (temporal logic)

- Input: Text prompt (and optional image) describing scene, style, motion, and constraints.

- Planning: Transformer stack parses entities, relationships, camera cues (e.g., “slow dolly,” “35 mm,” “noir”), and soundtrack hints to form a scene plan.

- Generation: Diffusion backbone shapes noise → frames per plan; object permanence and physics cues enforced across frames.

- Audio: Speech/SFX/music generated and aligned to timing and motion (e.g., footfalls on cuts), with unsafe content blocked.

- Safety & delivery: Prompts/frames/transcripts pass multi‑modal safety checks; approved results ship with provenance (e.g., C2PA metadata, visible moving watermark for app/web downloads).

Key features

- Hyper‑real rendering: Sharper lighting, textures, and materials with fewer temporal artifacts.

- Physics & continuity: Better collisions, fluids, cloth; stable tracking of characters across cuts.

- Audio that fits: Generated speech/SFX/music synced to action.

- Expanded styles: Cinematic, animated, photoreal, surreal; supports creative remixes in the app.

- Steerability & fidelity: Honors camera/lens cues, moods, multi‑character direction.

- Responsible defaults: Prompt/output moderation, stricter youth safeguards, likeness controls.

- Provenance & watermarking: C2PA metadata and visible moving watermark on app/web downloads.

- Availability: Short‑clip focus in the iOS app; invite rollout; API coming; web via sora.com.

Model data & filtering

Like OpenAI’s other models, Sora 2 was trained on diverse datasets, including public internet data, licensed/partner data, and data from users/human trainers. The pipeline applies rigorous filtering for quality and risk mitigation, and combines safety classifiers to help prevent the use or generation of harmful or sensitive content (e.g., sexual content involving minors).

Product & usage policies

OpenAI’s usage policies (communicated in‑product and publicly) prohibit:

- Violations of others’ privacy, including using another person’s likeness without permission.

- Threats, harassment, non‑consensual intimate imagery, content inciting violence or suffering.

- Impersonation, scams, fraud, and misleading content.

- Use that exploits, endangers, or sexualizes minors.

Enforcement: In‑app reporting, automated + human review, penalties/removals, and teen‑appropriate feed filtering.

Provenance & transparency

To improve transparency and traceability of generated media, OpenAI implements:

- C2PA metadata on assets to provide verifiable origin.

- Visible moving watermark on videos downloaded from sora.com or the Sora app.

- Internal detection tools to help assess whether certain video/audio was created by OpenAI products.

Note: Provenance is an evolving ecosystem; OpenAI indicates ongoing investment in stronger, interoperable tools.

Specific risks & mitigations

- Harmful or inappropriate outputs: Automated scanning over prompts, frames, scene descriptions, and audio transcripts; proactive detection and stricter thresholds for surfaced content.

- Misuse of likeness & deceptive content: At launch, no video‑to‑video; no public‑figure text‑to‑video; blocking generations of real people except through explicit, opt‑in “cameo” likeness controls; additional classifiers for racy/nudity/graphic violence and fraud‑related misuse.

- Child safety: Dedicated CSAM safety stack; partnership with NCMEC; scanning across inputs/outputs in 1P and API contexts (unless strict opt‑out criteria are met by enterprise customers).

- Teen safety: Higher moderation thresholds for minor users and when minors appear in uploads/cameos; teen‑appropriate public feed; stricter privacy defaults plus parental controls in the app.

Uses and applications

Marketing & Advertising

- Where it helps: Fast iterations, product teasers, brand explainers, multi‑variant social.

- Speed‑to‑asset: Ship 6–10 s teasers per concept; iterate colors/copy/CTAs.

- Personalization: Segment variants (region backdrops/VO).

- UGC‑style spots: Handheld/POV aesthetics for TikTok/Reels.

- Prompt to try: “10‑second 9:16 TikTok ad, glossy black smartwatch rotating on a mirror‑finish turntable, neon reflections, macro close‑ups, bold kinetic typography ‘Smarter. Faster. Better.’ studio lighting; whoosh SFX synced to text.”

Education & E‑Learning

- Where it helps: Visualize abstract topics, simulate risky labs, localize training.

- Explainers: Science processes, math intuition visuals, labeled diagrams.

- Accessibility: Alternate language narration and captions.

- Prompt to try: “Photosynthesis explainer: stylized plant cell, chloroplasts glowing as photons arrive; captions summarize each step; soft narration cadence; slower cuts for readability.”

Filmmaking & Creative Media

- Where it helps: Pre‑vis/storyboards, tone boards, style tests, interstitials.

- Stylization: Noir, anime, watercolor, VHS, cel‑shaded variants.

- Prompt to try: “Film noir: trench‑coated detective, rain‑soaked alley, neon reflections on wet asphalt, 24 fps, 35 mm lens bokeh; smoky sax motif; slow dolly‑in; high‑contrast chiaroscuro.”

Deep dive for anime workflows.

Social Creators & Influencers

- Where it helps: Trend remixes, challenges, consented cameos in the app.

- Series formats: Repeatable hook → reveal → CTA.

- Prompt to try: “POV vlog intro: first‑person walking into a hidden café, soft lo‑fi beat, quick whip‑pan transitions; end with text overlay ‘Day 3 in Kyoto’.”

Sora 2 vs alternatives (Investigational intent)

Below is pragmatic guidance based on late‑2025 positioning, output focus, and app UX.

- Sora AI 2: Short, high‑fidelity, app‑friendly clips (invite rollout); audio‑sync; realism/physics/continuity; social remix with consented cameos. Best for pre‑vis, teasers, social creation.

- Google Veo 3: Short‑to‑mid clips; lip‑sync/music; cinematic textures, some 4K contexts. Best for realistic talking scenes and high‑fidelity teasers.

- Runway (Gen‑4/Gen‑3 family): Short clips; editor‑led workflows; mature suite and integrations. Best for all‑in‑one creation/edit pipelines.

- Pika: Short social clips; fast, social‑first vibe. Best for quick meme‑able content.

- Stability (video): Variable fidelity and tooling; open ecosystems. Best for developer/open workflows.

Sources/notes: Veo 3 is often cited for lip‑sync/music and cinematic textures; Runway is widely used as an end‑to‑end suite; Sora 2 emphasizes audio+physics+provenance and a social app model with API coming. Evaluate by fidelity needs, delivery timeline, and stack integration.

Full head‑to‑head scorecard VosuAI

Safety stack (trust layer)

OpenAI documents a layered safety stack for Sora 2:

- Prompt blocking: Disallows policy‑violating inputs pre‑generation.

- Output blocking: Post‑generation scanning of frames, captions, and audio transcripts with multi‑modal classifiers and a reasoning monitor (e.g., CSAM, graphic violence).

- Youth safeguards: Stricter thresholds for under‑18 users; tighter checks when potential minors appear in uploads/cameos; teen‑appropriate public feed.

- Likeness controls: No public‑figure generations at launch; explicit consent (“cameos”) for real‑person likeness; extra detectors for non‑consensual/racy content.

- Provenance: C2PA metadata on assets, visible moving watermark on app/web downloads, internal detection tooling.

- Enforcement: In‑app reporting; combined automation + human review; penalties/removals for violations.

Takeaway: For brands and schools, provenance and safeguards help manage regulatory/reputational risk while enabling creative output.

Red teaming

External testers from OpenAI’s Red Team Network probed disallowed and violative categories (e.g., sexual content, nudity, extremism, self‑harm, wrongdoing, violence/gore, political persuasion), attempted jailbreaks, and stress‑tested product safeguards. Insights informed improvements to prompt filters, blocklists, classifier thresholds, and other mitigations.

Safety evaluations

OpenAI evaluated the safety stack with thousands of adversarial prompts, running outputs through helpful‑only model variants and automated grading. Two key metrics:

- not_unsafe (recall): how effectively unsafe content is blocked at output.

- not_overrefuse: how well benign content avoids false blocks.

Reported aggregate results (category → not_unsafe / not_overrefuse):

- Adult Nudity/Sexual Content (without likeness): 96.04% / 96.20%

- Adult Nudity/Sexual Content (with likeness): 98.40% / 97.60%

- Self‑harm: 99.70% / 94.60%

- Violence & Gore: 95.10% / 97.00%

- Violative Political Persuasion: 95.52% / 98.67%

- Extremism/Hate: 96.82% / 99.11%

Continued work on safety & deployment

OpenAI indicates ongoing iteration of safeguards (e.g., age prediction, stronger provenance), fine‑tuning of classifiers/thresholds, policy refinements, and staged access (invite‑based rollout, controlled capabilities) as real‑world usage evolves. System Card date reference: 2025‑09‑30.

Future of text‑to‑video (2025–2026)

- Longer, dialog‑driven narratives and scene stitching.

- Real‑time generation/editing (interactive authoring of camera, lighting, VO).

- AR/VR pipelines (classrooms, virtual production walls).

- Safer, provenance‑by‑default media (watermarking/C2PA ubiquity).

FAQs (Conversational intent)

Q: What is Sora AI 2? A: OpenAI’s newest text‑to‑video+audio model that generates realistic, physics‑aware, sound‑synchronized clips from prompts.

Q: How is it different from Sora 1? A: Improved realism/physics, stronger instruction‑following, synchronized audio, broader styles, and a more robust safety stack.

Q: Where can I use it today? A: Sora iOS app (invite‑only) and sora.com; API is expected.

Q: How long can clips be? A: The app emphasizes short clips (e.g., ~10 s for rapid creation/remix). Limits may evolve.

Q: Does it generate audio? A: Yes speech, music, and SFX aligned to visuals.

Q: Can I upload images or video? A: Text and image starts are supported; video‑to‑video is limited/controlled at launch for safety.

Q: Is it safe for brands and schools? A: Yes prompt/output moderation, youth safeguards, likeness rules, and provenance (C2PA+watermarks).

Q: Can I deepfake a celebrity? A: No public‑figure generations are blocked. Cameos require explicit consent.

Q: What’s next for Sora? A: Continued iteration, API availability, and evolving guardrails as usage scales.